By Stephen Schieberl and Joshua Noble

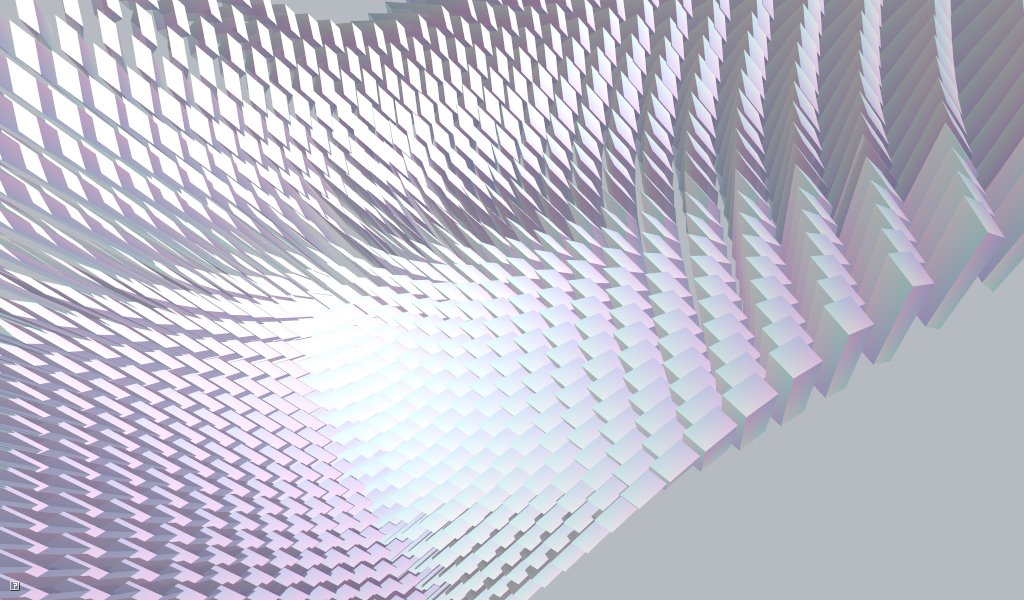

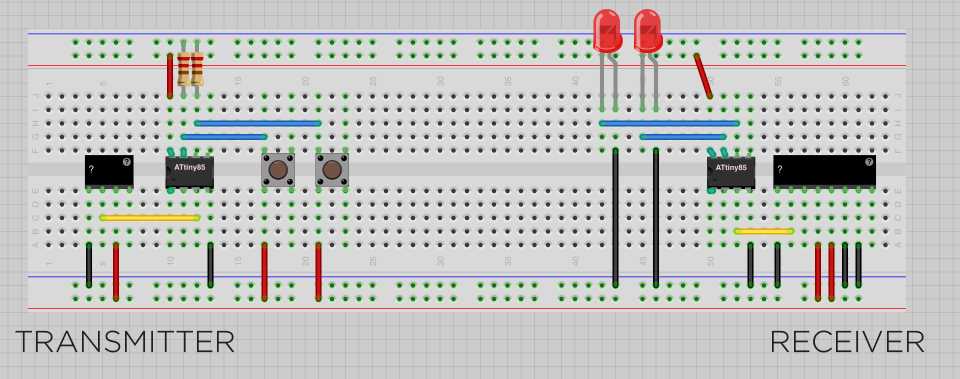

We've heard it plenty of times when people talk about working with the Kinect: "We'll just get the point cloud and turn it into a mesh." You may even have thought it yourself at some point: "This is going to be easy." Well, it's actually fairly difficult to do, and especially difficult to do quickly, because a mesh is a complex object to create and update in real time. In a mesh of either triangles or quads, each surface needs to be correctly oriented relative to its neighboring surfaces and to the camera. A point cloud coming from the Kinect contains tens of thousands of points or more (a full 640×480 depth image holds 307,200), which means a matching amount of geometry has to be created all at once, every frame.

The typical approach is to just display the points and not worry about making surfaces or shapes out of them. While this is fine for some projects, we'd like to see more people dig into making real shapes from their Kinect imagery, so we're going to show you how to do that in two different ways.

This is a fairly advanced tutorial that focuses more on theory and implementation than on a specific toolset. What we cover in this article can be applied to any of the creative coding toolkits, but we'll be building our demonstration projects in Cinder. If you don't know anything about Cinder, we suggest you take a look at http://libcinder.org/ first. With that said, let's get into some theory.
