United Visual Artists are an art and design practice based in London and working internationally. They create work at the intersection of sculpture, architecture, live performance and digital installation. Their initial work in live performance led to a sustained interest in spectacle, the relationship between passive spectation and active participation, and the use of responsive systems to create a sense of presence. In almost ten years of existence, UVA has developed a recognisable style, bringing the tricks and scale of live performance to the public installation domain, and vice versa. From the very beginning, UVA’s ambitions and ideas were slightly ahead of the available technical solutions, so to fulfil their vision UVA developed a custom software and hardware bundle, known today as d3.

Today, d3 is an integral part of UVA. This close relationship between the tool and the creative process has enabled UVA to create mesmerising work, exhibited widely across the world and shown at institutions such as the V&A, the Royal Academy of Arts, the South Bank Centre, the Wellcome Collection, Opera North Leeds, Durham Cathedral and The British Library. Their works have toured to cities including Paris, New York, Los Angeles, Tokyo, Hong Kong, Melbourne and Barcelona. At the same time, d3 has developed a life of its own and is now a semi-separate entity, providing services for some of the largest and most complicated shows of the last few years, from rock to pop and from theatre to broadcast.

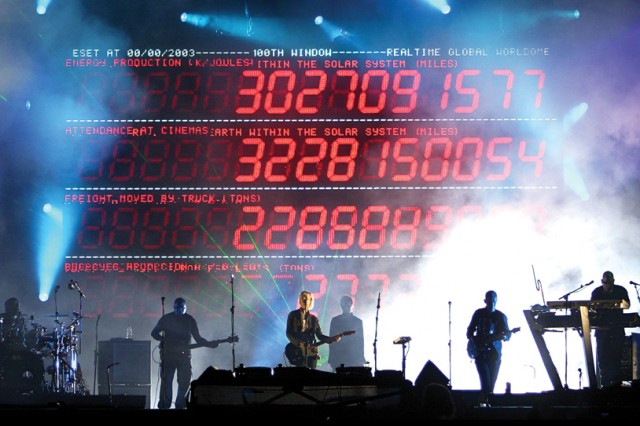

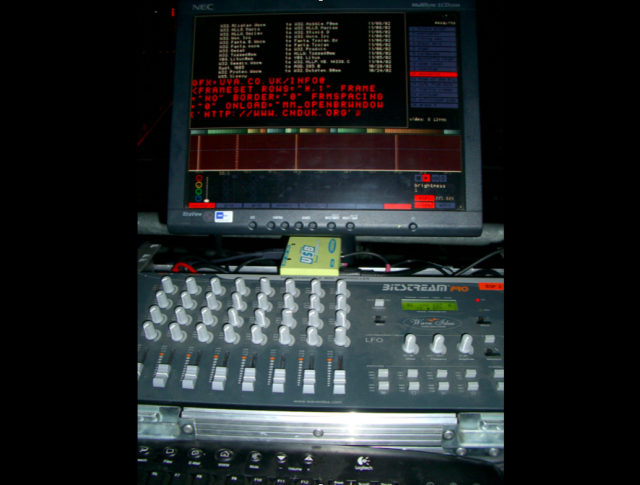

It all started with their work for Massive Attack’s 100th Window tour back in 2002. The idea was to support the performances with real-time information feeds from the Internet. In 2002 there were no ready-made solutions for such a task, so UVA made a move that was almost unprecedented at the time: they wrote a new piece of software specifically for the show. When Ash Nehru (Director of Software) joined Matt Clark (Creative Director) and Chris Bird (Production Director) to take charge of programming, he already had a framework called “blip”, written for a minor project. It was just a bunch of tools one could make things with. The first generation of d3 software, “Mosquito” or m1 (photo below), was built with this framework. The main principle of m1’s workflow was a very precise treatment of each track: visual events were bound to specific quarter beats, constructing the narrative of the show. The content was unique for every show, but it was not processed in real time. Various data, including site-specific information, stock-market prices and weather reports, were uploaded daily and arranged before the performance. Everything in the system was controlled by a bank of MIDI dials and sliders.

With every subsequent project, new features were introduced into the code. m1 evolved into m2, then d1, d2 and eventually d3. They were completely different applications, with different approaches to the UI and to dealing with the requirements of shows, and all were customisable to a greater or lesser extent. They all incorporated the idea of a module or filter that you can create many instances of and manage in time and space. All the common functions that go with a show, such as triggering, controlling fixtures, LEDs, mapping and projection mapping, are fundamental to the system; they are part of the framework.

d2 was the weapon of choice for at least two or three years before d3 came out. It was a 2D system, a schematic view of different screens, and much more like VJing: there was a keyboard with a bunch of clips on it that one could play. Later, a timeline that one could play directly onto was added. It didn’t have the precise control over the narrative that m1 was all about; it concentrated more on live performance, very much like traditional VJing.

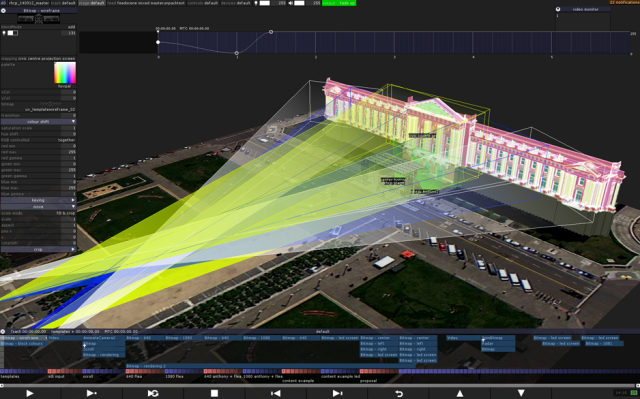

The latest iteration of the software, d3, is a return to the Massive Attack and m1 roots: a timeline, Bézier curves and keyframes going down to quarter beats. But it was the first time the timeline had been married with generative content in this particular way. d3 grew out of the need to demonstrate project content to a client before the build stage. Initially 3D Studio Max was used, but rendering took five times longer than making the actual content. d3 solved that issue, and music and generative content visualisation were then gradually implemented.

d3 is a framework that allows the creation of multiple applications within it. These are called modules, and they are arranged on the timeline, synchronised together and sequenced. A typical show on d3 has between fifty and a hundred content layers, each of which is a module broadly equivalent to an openFrameworks or Cinder application. So it’s not a toolkit in the same way as other toolkits; it’s much more a piece of software.
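To make the module-and-timeline idea concrete, here is a minimal Python sketch. It is not d3’s module API (which, per the interview, is C++ and not shown here); the names `Module`, `SolidColour`, `Chaser`, `Layer` and `Timeline`, the one-row canvas and the “lighten” blend are all hypothetical simplifications. Each module turns its inputs into a rendered canvas, and the timeline decides which layers are active at a given beat and blends them.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Canvas = List[float]  # a tiny one-row canvas of brightness values (0.0 to 1.0)


class Module:
    """Hypothetical module interface: named inputs in, a rendered canvas out."""

    def __init__(self, **inputs: float) -> None:
        self.inputs: Dict[str, float] = dict(inputs)

    def render(self, beat: float, width: int) -> Canvas:
        raise NotImplementedError


class SolidColour(Module):
    def render(self, beat: float, width: int) -> Canvas:
        return [self.inputs.get("level", 1.0)] * width


class Chaser(Module):
    """Lights a single pixel that advances one step per beat."""

    def render(self, beat: float, width: int) -> Canvas:
        canvas = [0.0] * width
        canvas[int(beat) % width] = 1.0
        return canvas


@dataclass
class Layer:
    module: Module
    start_beat: float
    end_beat: float


@dataclass
class Timeline:
    width: int
    layers: List[Layer] = field(default_factory=list)

    def render(self, beat: float) -> Canvas:
        out = [0.0] * self.width
        for layer in self.layers:
            if layer.start_beat <= beat < layer.end_beat:  # layer is active now
                frame = layer.module.render(beat, self.width)
                # "lighten" blend: keep the brighter value per pixel
                out = [max(a, b) for a, b in zip(out, frame)]
        return out


show = Timeline(width=4, layers=[
    Layer(SolidColour(level=0.2), start_beat=0, end_beat=16),
    Layer(Chaser(), start_beat=4, end_beat=8),
])
print(show.render(2))  # [0.2, 0.2, 0.2, 0.2]
print(show.render(5))  # [0.2, 1.0, 0.2, 0.2]
```

In this picture, adding a new look to a show means writing one more `Module` subclass and placing a `Layer` for it on the timeline.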

The notion of an unrestricted, simple space that Processing provides is what a module gives you. When you’re making a new module, it’s exactly that: a set of inputs, a render output and basically a blank canvas, with no preconceived notions of anything. As far as generative content goes, though, that alone is very restrictive: there is much more to a successful installation than being able to draw something on a 2D grid of pixels. d3 is very focused on workflow, and its main aim is to ensure a smooth running experience from show to show. It’s all about making visual accompaniment to music very quickly. If one wants to use a particular effect, there is no need to worry about it in the code; it’s just part of the framework. Ash describes some of the features:

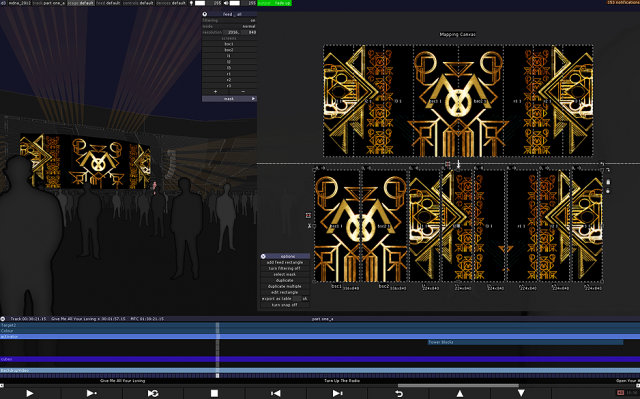

…It’s all about how to make visual music very quickly, how to make visual accompaniment to music very quickly. If you need to pick out something on one bar and not on another, you just don’t need to worry about that in the code; it’s part of the framework. For example, here I’ve got a video module, and I can choose which video clip goes on at which time. I’m changing the video clip every 4 beats. So at all times I’m working in a space that lets me view the show in the context I’m actually going to be seeing it in. I can do things like go to that video clip and examine various things about it, such as its codec. I can change the framerate if I want, I can make it go on a beat, I can do quantised playback. I can make this clip last for 4 beats, or 8 beats, precisely.
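The beat arithmetic behind changing clips every 4 beats, quantised playback and making a clip last exactly N beats can be sketched in a few lines. This assumes a constant tempo and time measured in seconds from the start of the song; the function names are hypothetical, not part of d3.

```python
import math


def beat_length_s(bpm: float) -> float:
    """Seconds per beat."""
    return 60.0 / bpm


def next_boundary_s(now_s: float, bpm: float, grid_beats: int) -> float:
    """Quantised start: the next grid boundary (e.g. every 4 beats) at or after now_s."""
    grid_s = grid_beats * beat_length_s(bpm)
    return math.ceil(now_s / grid_s) * grid_s


def clip_index_at(beat: float, clip_count: int, beats_per_clip: int = 4) -> int:
    """Which clip is playing at `beat` when the clip changes every beats_per_clip beats."""
    return int(beat // beats_per_clip) % clip_count


def rate_to_fit(clip_duration_s: float, bpm: float, beats: int) -> float:
    """Playback-speed multiplier that makes a clip last exactly `beats` beats."""
    return clip_duration_s / (beats * beat_length_s(bpm))


# At 120 BPM one beat is 0.5 s, so a 4-beat grid is 2 s.
print(next_boundary_s(3.1, bpm=120, grid_beats=4))  # 4.0
print(clip_index_at(9, clip_count=3))               # 2
print(rate_to_fit(3.0, bpm=120, beats=4))           # 1.5 (play faster)
print(rate_to_fit(3.0, bpm=120, beats=8))           # 0.75 (play slower)
```

Stretching a clip with a rate multiplier is only one way to make it fit a beat count; trimming or looping would be equally valid choices.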

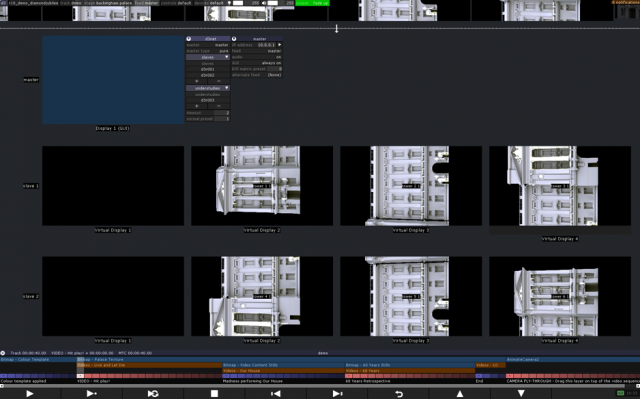

So there’s lots of stuff to deal with rhythmic animation of things. Here is just another generative module, and there’s another; it’s a huge library of things. When we make a new project, we just create new modules and stick them on the timeline. One interesting thing we can do, because of the geometrical modelling of the stage, is virtual projection. Position and pan an emitter, and the system will figure out what colour to turn each individual pixel. You can also compensate for screen movement: if I move the screens forward, the content adjusts perfectly. That’s useful when we are dealing with tracking systems. You can see that content is spilling onto the floor, it’s going onto the screens and onto the lights. So, from a design point of view, you don’t make any distinction whether a fixture is LED, projection, DVI or DMX. As long as it’s just a pixel, you can deal with the whole system as if it were a single canvas. It’s a very important aspect of the system. The content guy making this clip doesn’t have to consider what stage it’s going onto.
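Virtual projection can be approximated like this: every fixture pixel has a known 3D position in the stage model, so projecting that position back through a virtual emitter tells you which part of the image it should show. The Python sketch below assumes a simple pinhole emitter looking straight down the +z axis and nearest-neighbour sampling; `project` and `shade_pixels` are hypothetical names, and d3’s actual implementation is not described in the interview.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[int, int, int]
Image = List[List[RGB]]  # rows of RGB pixels
BLACK: RGB = (0, 0, 0)


def project(point: Vec3, emitter: Vec3, focal: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a world-space point into the emitter's image.

    Simplification: the emitter looks straight down +z. Returns normalised
    (u, v) in [0, 1) when the point is inside the frustum, otherwise None.
    """
    dx, dy, dz = (p - e for p, e in zip(point, emitter))
    if dz <= 0:  # the point is behind the emitter
        return None
    u = 0.5 + focal * dx / dz
    v = 0.5 + focal * dy / dz
    if 0.0 <= u < 1.0 and 0.0 <= v < 1.0:
        return u, v
    return None


def shade_pixels(pixels: Sequence[Vec3], emitter: Vec3, focal: float, image: Image) -> List[RGB]:
    """Colour every fixture pixel (LED, projector or DMX alike) by
    nearest-neighbour sampling the virtually projected image."""
    h, w = len(image), len(image[0])
    colours = []
    for p in pixels:
        uv = project(p, emitter, focal)
        colours.append(BLACK if uv is None else image[int(uv[1] * h)][int(uv[0] * w)])
    return colours


RED, GREEN, BLUE, WHITE = (255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)
frame = [[RED, GREEN], [BLUE, WHITE]]
# Pixel positions come from the stage model; re-running this each frame with
# updated positions is what keeps content locked in place when screens move.
leds = [(-1.0, -1.0, 4.0), (1.0, 1.0, 4.0), (0.0, 0.0, -1.0)]
print(shade_pixels(leds, emitter=(0.0, 0.0, 0.0), focal=0.5, image=frame))
# [(255, 0, 0), (255, 255, 255), (0, 0, 0)]
```

Because the sampling only needs a pixel’s position, the same function serves LED tiles, projector pixels and DMX fixtures, which is the “single canvas” property described above.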

The other thing the system is good at, again by necessity, is actually controlling these fixtures. The story is: we used it as a demo system and started building the show, adding new features as we needed them. We got to the point where we had built the entire show and went into rehearsals with that system, and their intention was to dump that whole system into the Catalyst media system. When we arrived, they were building this for the first time and didn’t know how to test it, so we ended up sending the live signal for these screens. Then it turned out that each of these big ellipses is a massive radio antenna: you can’t just send them the signal, you have to break the signal up and wrap it. So you have to break it up into separate sub-strips, and so we created this feed system, which allowed us to map content. Here is a schematic view of what’s going on. These are the screens we have on our stages. Any of these things can be formatted: we can split them, scale them, rotate them and create arbitrarily complex outputs, which is handy…
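A feed system of this kind boils down to copying rectangles of the show canvas onto output frames, transforming them on the way. The sketch below is a hypothetical simplification (rotation only in 90-degree steps, integer nearest-neighbour scaling), and `apply_feed` is an illustrative name rather than anything from d3.

```python
from typing import List, Tuple

Frame = List[List[int]]


def rotate_cw(region: Frame, quarter_turns: int) -> Frame:
    """Rotate a 2D region clockwise by quarter_turns * 90 degrees."""
    for _ in range(quarter_turns % 4):
        region = [list(row) for row in zip(*region[::-1])]
    return region


def scale_nearest(region: Frame, factor: int) -> Frame:
    """Integer nearest-neighbour upscale."""
    return [[v for v in row for _ in range(factor)] for row in region for _ in range(factor)]


def apply_feed(canvas: Frame, output: Frame, src: Tuple[int, int, int, int],
               dst: Tuple[int, int], quarter_turns: int = 0, scale: int = 1) -> Frame:
    """Copy the canvas rectangle src=(x, y, w, h) to position dst=(x, y) on an output,
    rotating and scaling it on the way; pixels falling off the output are dropped."""
    sx, sy, w, h = src
    dx, dy = dst
    region = [row[sx:sx + w] for row in canvas[sy:sy + h]]
    region = scale_nearest(rotate_cw(region, quarter_turns), scale)
    for y, row in enumerate(region):
        for x, value in enumerate(row):
            if 0 <= dy + y < len(output) and 0 <= dx + x < len(output[0]):
                output[dy + y][dx + x] = value
    return output


canvas = [[y * 4 + x for x in range(4)] for y in range(2)]  # [[0, 1, 2, 3], [4, 5, 6, 7]]
out = [[0] * 4 for _ in range(2)]
apply_feed(canvas, out, src=(0, 0, 2, 2), dst=(0, 0))                   # left half as-is
apply_feed(canvas, out, src=(2, 0, 2, 2), dst=(2, 0), quarter_turns=1)  # right half rotated
print(out)  # [[0, 1, 6, 2], [4, 5, 7, 3]]
```

Splitting a screen into sub-strips is then just a list of such feed rectangles per output.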

It is only about three years since UVA started to treat their in-house tool as a serious venture. That meant not only developing a solid platform, but also providing support for customers around the world. Today UVA and d3 are separate but closely related actors. In fact, UVA is d3’s number one customer, and new features and modules often evolve out of UVA’s experimental work.

UVA designers and d3 coders are a tightly knit team. For example, when UVA worked on Massive Attack, Matt would use After Effects to show Ash what he wanted. Ash would then understand it well enough to replicate it in real time, but with a few extra dials that one could push up to get something no one expected. Then bugs would happen, unpredictable things would happen; they start by jamming on the end result from traditional tools, but end up in a very different place. The process often starts in Processing, then goes into C++ and then into the d3 module API, and they have made it as easy as possible. Designers make models and prototypes in Processing because it’s quick, while d3 developers gear up d3 and program tools to do very specific visual things.

Recently, “d3 Designer”, a software-only version, was released. So far it is PC-only and allows users to design and preview their show, which can then be passed on to d3 hardware that is bought or rented. Before that, d3 was a hardware-only solution, meaning that customers had to have a d3 machine to work on their projects. UVA have a fleet of machines that they rent out if people need them, since rental companies don’t have them, but that is not what UVA normally do. There is a group of partners in different countries who deal with that: one in New York, one in California, and others in South America and China. These partners bring in a considerable number of customers. Generally, unless it’s a new customer or an unusual project, they can deal with it on their own.

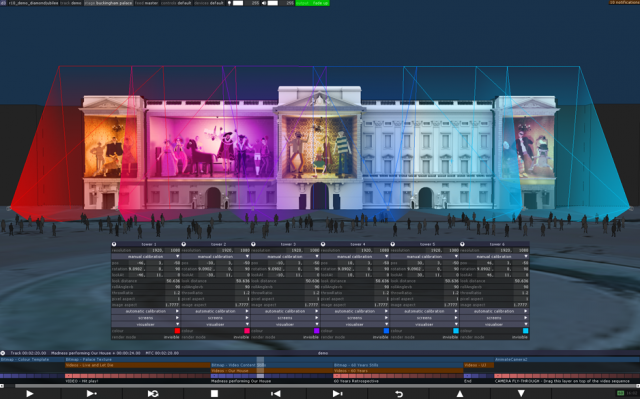

Typically, when people outside UVA use d3, they are making a show that doesn’t have any interactive components. It has cues and video playback, it’s complicated, there are lights, and it’s on a big scale: Madonna, Buckingham Palace and similar. These are shows where one needs visibility up front and has to deal with complex structures. Larger shows require a lot of custom development and support, and in that case d3 support staff are called in to the project.

d3 is not an open source product, because of the very high responsibilities associated with high-profile performances. At the moment, use of d3 is thoroughly guided, which means that users are provided with all the support they need to succeed. d3 is aimed at a different market, with a different set of requirements from open source projects such as Processing, openFrameworks and Cinder.

Nonetheless, d3 is relatively open to collaboration. For instance, UVA ran a small workshop with invited developers. The purpose was to create a network of freelance developers who could be hired on projects as required and contribute different skills to the framework. If Kylie Minogue comes to you and wants a cool generative module instead of rendered content, then a pool of people to draw on would be very handy, and it’s good work for the developers because it pays well and it’s high profile. In return, they could use d3 on their own projects without paying for it. The difficulty was finding people who are both interested and qualified, since it requires solid C++ knowledge. In the right hands it’s an incredible tool that allows one to do very complex tasks, and it is highly creative.

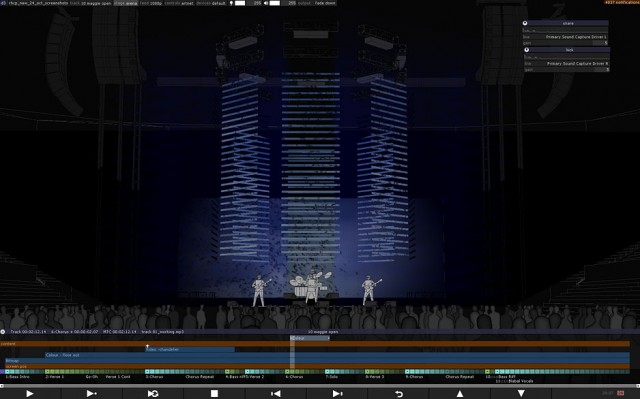

For the stage design of the Red Hot Chili Peppers’ “I’m With You” tour, UVA took the band’s iconic octagonal logo and exploded it upwards and outwards, creating a bold, futuristic octagonal chandelier above the stage. This exploded logo is a versatile sculptural form, part lighting surface and part high-definition video screen. Directly below it is an LED floor in the same octagonal shape, which lights the band and changes with their movements.

The design blends multiple layers of technology: traditional lighting fixtures, high-power LED fixtures, and low- and high-resolution LED screens suitable for Image Magnification (IMAG) of live shots of the band, as well as for graphical and other video content. Functioning as a pre-visualiser, a sequencer and an output tool, d3 has for the first time been successfully integrated with a grandMA lighting console for maximum versatility in live show control. One MA console is used for the lighting, and another to control video and live cameras. The central hub of the system remains d3, which provides Art-Net control of Blackmagic SDI video routers, receives position information for the moving scenography from the TAIT Navigator system, and runs video, camera effects and some sound-to-light effects linked to the bass and snare drums, all from within the d3 toolkit.
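The article does not say how those sound-to-light effects were derived. One common approach, assumed here purely for illustration, is to detect drum hits as rising edges in a filtered band level (bass or snare) and drive a decaying brightness envelope from them. The level values and function names below are hypothetical.

```python
from typing import List, Sequence


def onsets(levels: Sequence[float], threshold: float) -> List[bool]:
    """Rising-edge detection: a hit when a band's level crosses the threshold upwards."""
    hits, above = [], False
    for level in levels:
        now_above = level >= threshold
        hits.append(now_above and not above)
        above = now_above
    return hits


def envelope(hits: Sequence[bool], decay: float = 0.5) -> List[float]:
    """Jump to full brightness on each hit, then decay exponentially per frame."""
    value, out = 0.0, []
    for hit in hits:
        value = 1.0 if hit else value * decay
        out.append(value)
    return out


# Hypothetical per-frame levels from a bass-band (or snare-band) filter.
bass = [0.1, 0.9, 0.8, 0.2, 0.7]
print(onsets(bass, threshold=0.5))            # [False, True, False, False, True]
print(envelope(onsets(bass, threshold=0.5)))  # [0.0, 1.0, 0.5, 0.25, 1.0]
```

Running one such envelope per drum lets the bass and snare drive different fixture groups independently.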

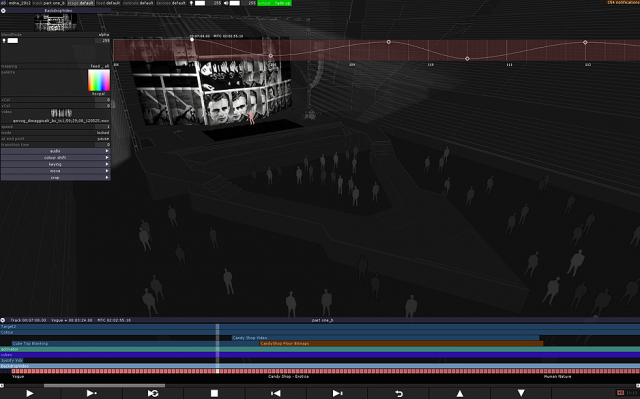

…We had this crazy cylindrical screen that actually opened up. The whole thing is a structure. It’s completely mad. Here we have these bitmap modules, which are standing in for the live cameras, and then we have this thing we called piping: you can pipe the output of one module into another module. It’s a rudimentary node-based approach, but it’s much simpler, and it operates over time as well. This is assembling the 4 inputs into a single canvas. I can drop one out if I want: these are cameras, so if you want to quickly drop one out, you get a 3-person look instead of a 4-person look. Modules didn’t care about things like aspect ratio. This is an example of a custom module we made for a client. The client wanted to cut cameras on this screen, to be able to grab it and rotate it, increase the border between them, or rotate faster and in a particular direction. So we knocked this up; it’s about a day’s work in d3. Then we do a custom projection onto this surface. It’s all done in pixels and shaders. As that screen opens, we just update the module every frame and the shaders take care of it, and that’s how the content ends up staying in the same place. This is actually what’s going out to the LED: because the bottom is narrower than the top, you’ve got these gaps. If you want to make something move smoothly, it has to jump: when it gets to that pixel, it has to jump across to that one; it can’t disappear into the gap. As you start the opening, you can see the content disappearing into the gaps, and the tiles become obvious near the bottom. That’s kind of the sweet spot of the system: dealing with complex, moving, shape-changing content, generative, live. That’s what it is really designed for…
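Piping can be pictured as modules that take other modules as inputs. In this minimal sketch (hypothetical names, not d3’s API), a module is just a function from frame number and size to pixels. The tiling module gives each piped input a slot and asks it to render at that slot’s size, which is why dropping a camera from the list needs no other changes and why the inputs need not care about aspect ratio.

```python
from typing import Callable, List, Sequence

Frame = List[List[int]]
Module = Callable[[int, int, int], Frame]  # (frame_no, width, height) -> pixels


def bitmap(label: int) -> Module:
    """Stand-in for a live camera (like the bitmap modules in the demo):
    fills whatever size it is asked for with a constant value."""
    def render(frame_no: int, width: int, height: int) -> Frame:
        return [[label] * width for _ in range(height)]
    return render


def tile(inputs: Sequence[Module]) -> Module:
    """A module whose inputs are piped from other modules' outputs and
    assembled side by side into a single canvas."""
    def render(frame_no: int, width: int, height: int) -> Frame:
        out = [[0] * width for _ in range(height)]
        n = len(inputs)
        for i, source in enumerate(inputs):
            x0, x1 = i * width // n, (i + 1) * width // n
            part = source(frame_no, x1 - x0, height)  # each input renders at its slot size
            for y in range(height):
                out[y][x0:x1] = part[y]
        return out
    return render


cams = [bitmap(1), bitmap(2), bitmap(3), bitmap(4)]
print(tile(cams)(0, 8, 1))                         # [[1, 1, 2, 2, 3, 3, 4, 4]]
print(tile([cams[0], cams[1], cams[3]])(0, 6, 1))  # [[1, 1, 2, 2, 4, 4]]
```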

As already mentioned, experience gained on live performances is sometimes translated into the public domain. For instance, Origin evolved from the stage design for the Coachella music festival in Palm Springs in April 2011. With a specially commissioned soundscape by award-winning composer Mira Calix, the cube-like structure was not just an elevated performance space for bands; the stage itself became the performer. The show lasted several minutes and was performed numerous times during the festival. d3 played an essential role in that project, from setup to audio sequencing to driving 810 Barco MiStrips and 184 ColorBlast 12s.

Building on the Coachella experience, UVA made Origin, which was shown in New York and San Francisco. Again, only d3’s pre-visualiser could have made the piece possible. It’s a 10 by 10 metre volume consisting of 125 cubic spaces populated with linear LED strips. In practice it was a 3D volumetric screen that could do anything, but UVA focused their efforts on creating a character with a personality, so that people could engage with it emotionally. There were only two days to set it up and one evening to programme the piece. Doing this on a 2D platform would be terrifying, but with d3 UVA could set up a model in their studio and get a feel for the piece; otherwise it would be very difficult to prototype at that scale.

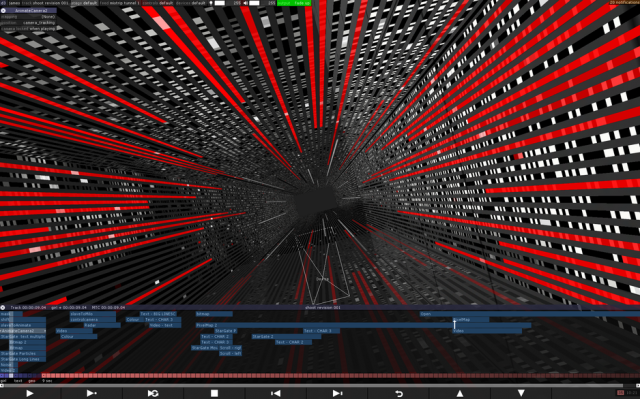

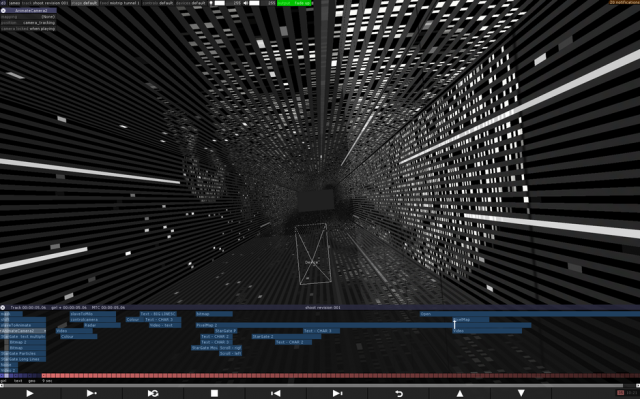

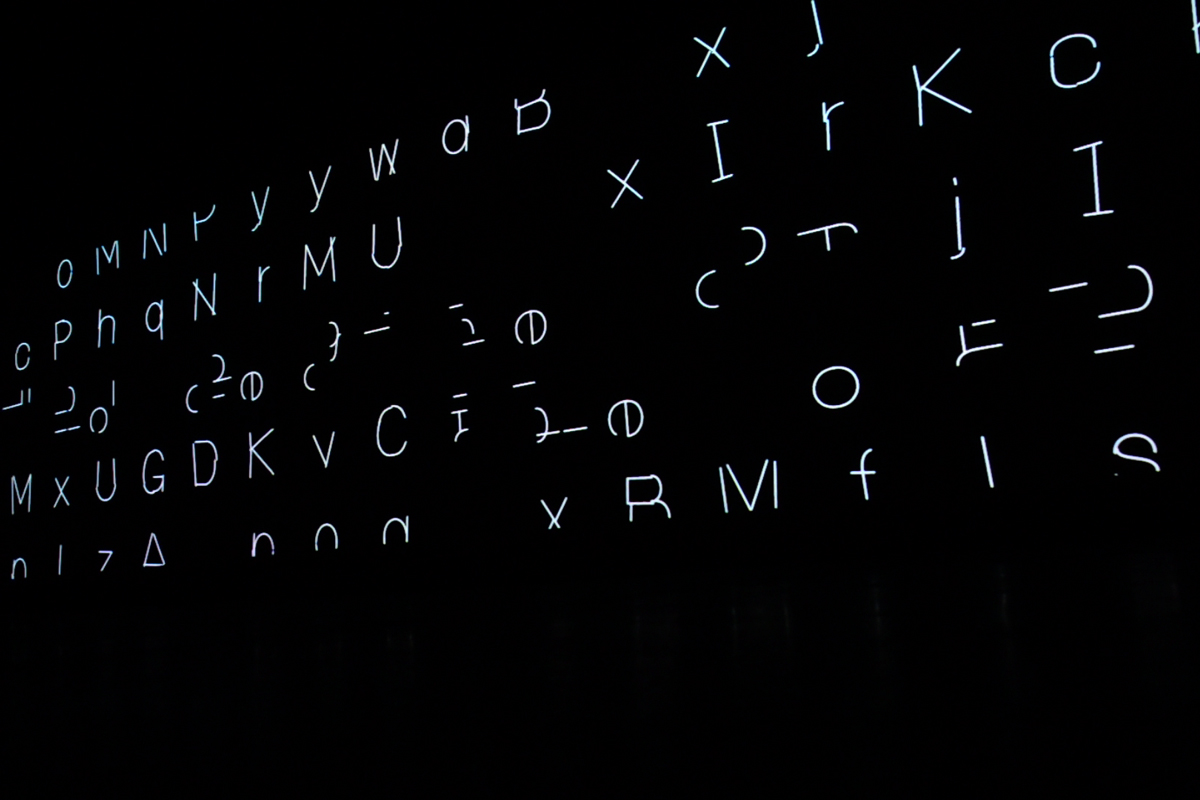

CAN has already covered “The Dream” video for Sprint, a US telecom company. The idea of the video was to motivate people to switch off their mobile devices, putting them to “sleep” during the movie. In return, they could request a custom “dream” for their phones via text message and social media. In this project Artisan, UVA’s sister division, made an ingenious attempt to bring the art installation experience to the big screen. Instead of using computer-generated graphics, they built a full-size installation consisting of two tunnels covered with LED strips, and filmed it. d3 was used to map, simulate and output content onto the tunnels through which the camera moved. The most difficult part was shooting perspective mapping based on the camera’s moving viewpoint: d3 virtually projected and re-mapped the content based on the camera position in real time. The project is an example of unorthodox use of d3, exploring its potential in the film industry.

d3 has gone through many transitions and iterations in recent years. There is an architecture in the works called Smart Clips. Under Smart Clips there would be no different module types; instead, everything would be video content. When you ask it for a frame, it goes and renders something using a .dll and some assets, or it might go off and render a page in WebKit. In other words, from the outside Smart Clips look like video clips, but you can unpeel them to expose layers of control, plug inputs into them, and have them do things live.

They are deterministic in a sense: if I get frame 10, it will always look the same. Or you can make them non-deterministic by taking inputs from the Internet or the weather. But when you exchange them, you are dealing with media files that contain all the code internally. Think how big a .dll is in comparison to a video file: we are now dealing with multi-gigabyte video files, whereas .dlls are around a hundred MB.
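The determinism described above can be illustrated with a clip whose frames are computed from the frame number rather than read from disk. This hypothetical Python sketch shows the idea only, not the Smart Clips architecture itself: a seeded pseudo-random generator stands in for the .dll and assets, and `live_input` stands in for an external feed.

```python
import random
from typing import List, Optional


class SmartClip:
    """Hypothetical sketch: behaves like a video clip (ask for frame n, get
    pixels back) but renders frames on demand instead of decoding stored ones."""

    def __init__(self, width: int, seed: int = 0) -> None:
        self.width = width
        self.seed = seed

    def frame(self, n: int, live_input: Optional[float] = None) -> List[float]:
        # Deriving the RNG seed from (seed, n) alone means frame n is identical
        # every time it is requested, like a frame read from a file.
        rng = random.Random(self.seed * 1_000_003 + n)
        pixels = [rng.random() for _ in range(self.width)]
        if live_input is not None:
            # An outside value (e.g. a web or weather feed) makes the output
            # depend on when it is rendered, not just on n.
            pixels = [min(1.0, p * live_input) for p in pixels]
        return pixels


clip = SmartClip(width=4, seed=42)
print(clip.frame(10) == clip.frame(10))  # True: deterministic per frame
print(clip.frame(10, live_input=0.0))    # [0.0, 0.0, 0.0, 0.0]
```

The whole “clip” here is a few lines of code plus a seed, which is the size argument made above in miniature.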

In addition, the team is developing something called components: pieces of code that contain both a set of shaders and some CPU data, bundled into a format that allows them to be assembled like Lego bricks. This avoids the overcomplicated spaghetti diagrams you end up with in node-based systems, which are very hard to reason about and very hard to debug. The idea is simply: “I’m going to take a video brick and stick a scale brick underneath it, and stick a blur on it, and then mirror, shadow, etc.” It would feel more like putting bricks together than connecting things with wires, which is the nodal philosophy. The other important thing about components is that they work over time. In node-based systems you have x and y, but x and y don’t mean anything; they are just positions for things, which is very inconvenient. In components, the vertical axis is the flow of signal, with the main visual signal flowing from top to bottom, while left to right is time. Things can change over time, and appear and disappear.
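The brick model can be sketched as an ordered stack of transforms, each with a time span: order in the list is the top-to-bottom signal flow, and the start/end times are the left-to-right time axis. Everything here (the `Brick` class, the one-row signal, the example bricks) is a hypothetical illustration, not the components format the team is developing.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Signal = List[float]


@dataclass
class Brick:
    """Hypothetical component: one transform plus the time span it is active for.
    Position in the stack (top to bottom) is signal flow; start/end place it in time."""
    name: str
    apply: Callable[[Signal, float], Signal]
    start: float = 0.0
    end: float = float("inf")


def run_stack(stack: Sequence[Brick], t: float) -> Signal:
    """Feed the signal down the stack, skipping bricks not active at time t."""
    signal: Signal = []
    for brick in stack:
        if brick.start <= t < brick.end:
            signal = brick.apply(signal, t)
    return signal


video = Brick("video", lambda s, t: [0.0, 0.25, 0.5, 1.0])  # source brick ignores its input
scale = Brick("scale", lambda s, t: [v * 0.5 for v in s])
mirror = Brick("mirror", lambda s, t: s + s[::-1], start=4.0, end=8.0)

stack = [video, scale, mirror]
print(run_stack(stack, 0.0))  # [0.0, 0.125, 0.25, 0.5]
print(run_stack(stack, 5.0))  # [0.0, 0.125, 0.25, 0.5, 0.5, 0.25, 0.125, 0.0]
```

Because the stack is a plain ordered list, there is no wiring to untangle: reading top to bottom tells you exactly what happens to the signal at any moment.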

To read more about d3, see the product page linked below. Big thanks to Matt and Ash for taking the time to talk about UVA and d3.

United Visual Artists | d3 technologies

(Interview by Filip Visnjic – Transcript and additional text by Andrey Yelbayev)

UVA previously on CAN: Sprint “The Dream” Making of, High Arctic by UVA
