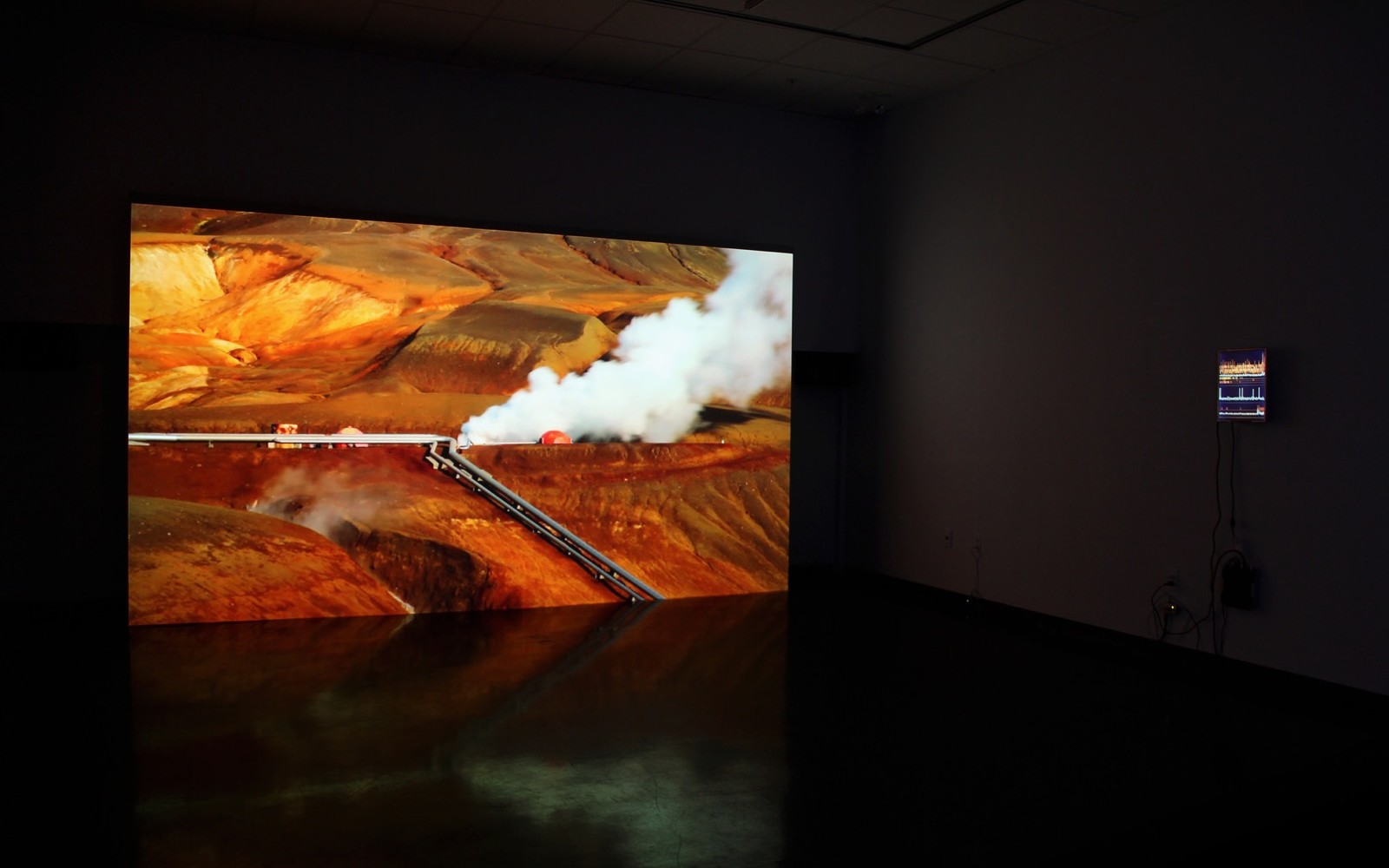

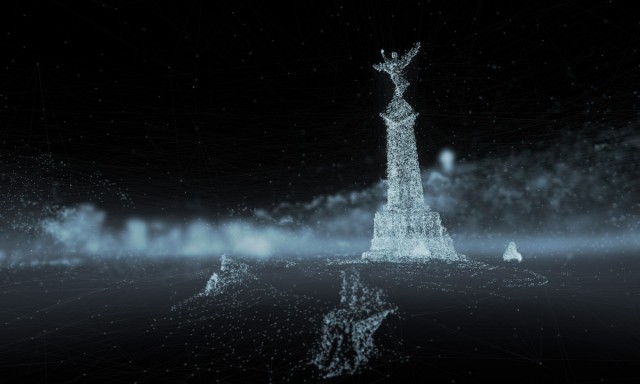

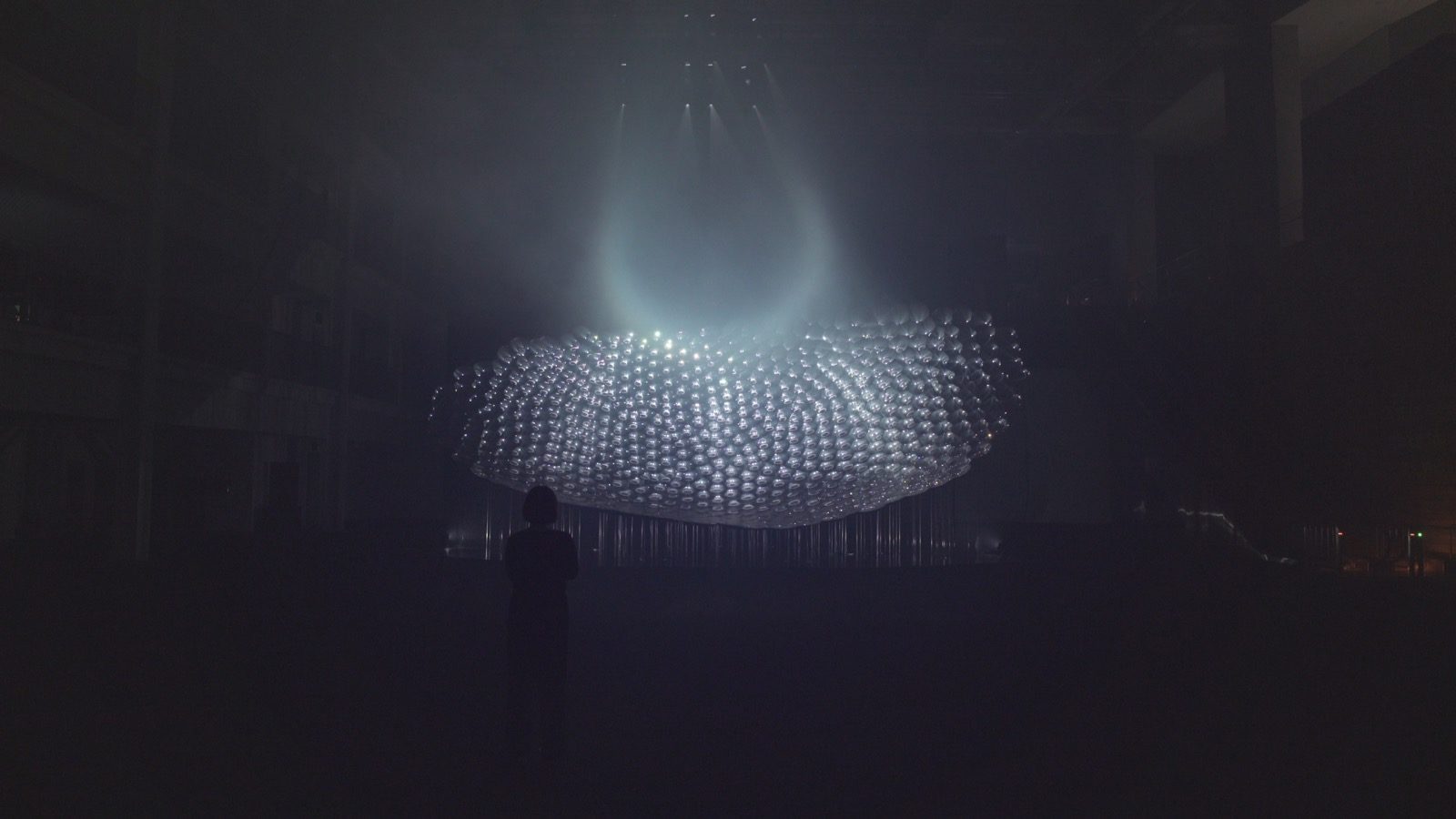

Created by François Quévillon, ‘Algorithmic Drive’ is an interactive installation and performance inspired by autonomous cars and dash cam compilations. The work plays with the tension generated by confronting the technologies used by mobile robotics with the unpredictable nature of the world.

“Several technical, economical, legal, moral and ethical issues are raised by vehicles that maneuver without human intervention by feeding and following algorithmic procedures. On their side, dash cam videos display accidents or spectacular events that portray roads as spaces where the unexpected occurs.”

François Quévillon

To create the work, François built a database of recordings with a camera that is connected to his car’s on-board computer. The videos captured are synchronized with information such as location, altitude, orientation, speed, engine RPM, stability and the temperature of various sensors. They feed a sampling system that uses signal processing, data analysis and computer vision algorithms to sort the content statistically. It assembles an endless video by modifying parameters related to images, sounds, the car’s activity and the environment in which it’s located. A controller displays data related to each scene and allows people or a performer to interact with the system.
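As a rough illustration of what synchronizing video with car data can involve, the sketch below pairs each video frame with the closest telemetry reading in time. It is not the artist's actual code: the function name `nearest_sample`, the sample values and the one-second logging rate are all assumptions made for the example.

```python
from bisect import bisect_left


def nearest_sample(times, values, t):
    """Return the value whose timestamp is closest to t.

    `times` must be sorted in ascending order and match `values` in length.
    """
    i = bisect_left(times, t)
    if i == 0:
        return values[0]
    if i == len(times):
        return values[-1]
    before, after = times[i - 1], times[i]
    # Pick whichever neighbouring reading is closer in time.
    return values[i] if (after - t) < (t - before) else values[i - 1]


# Hypothetical telemetry log: timestamps in seconds, speed in km/h.
telemetry_times = [0.0, 1.0, 2.0, 3.0]
telemetry_speed = [0.0, 12.0, 30.0, 28.0]

# Hypothetical timestamps of three video frames.
frame_times = [0.4, 1.6, 2.9]
frame_speed = [
    nearest_sample(telemetry_times, telemetry_speed, t) for t in frame_times
]
print(frame_speed)
```

The same lookup could be repeated for any other logged channel, such as altitude or engine RPM, so that every frame carries a full set of readings.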

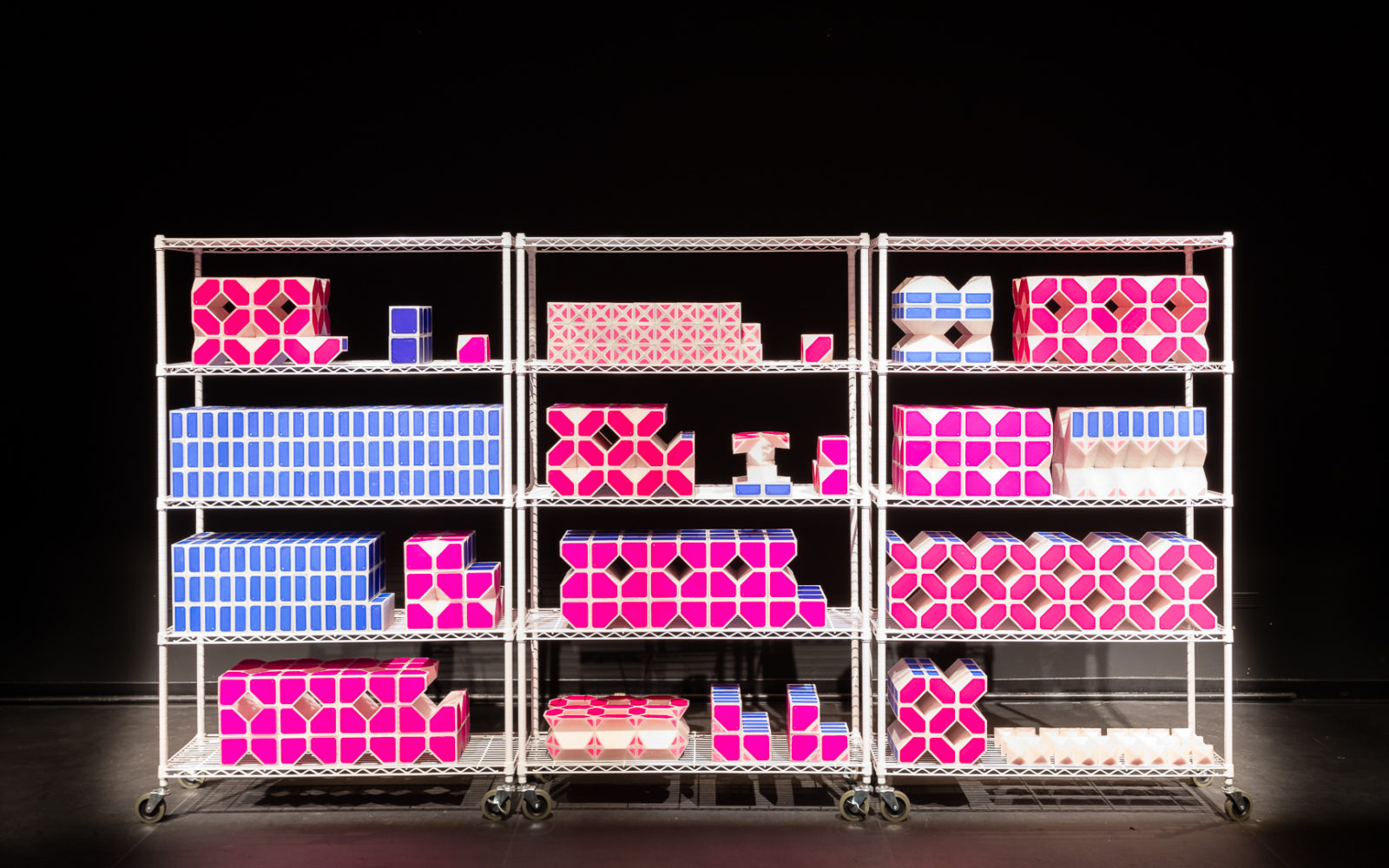

The camera is connected via Bluetooth to the car’s OBD-II port. Audio features are extracted using sound analysis software. Visual features are based on OpenCV and road scene segmentation using the SegNet deep convolutional encoder-decoder architecture and its Model Uncertainty. The camera metrics, audio, visual and automotive data are sorted from minimum to maximum value. They are mapped using a Uniform Manifold Approximation and Projection (UMAP) dimension reduction technique. The system has a custom-built interface with illuminated rotary encoders and a monitor installed on a road case that contains a subwoofer.
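The minimum-to-maximum sorting described above can be pictured with a small sketch. It assumes each clip is reduced to a handful of numeric features; the clip names, feature names and values are invented for illustration, and the UMAP mapping step is not covered here.

```python
# Hypothetical clip database: one dictionary of features per recording.
clips = [
    {"id": "clip_a", "speed_kmh": 62.0, "rpm": 2100, "loudness": 0.41},
    {"id": "clip_b", "speed_kmh": 15.0, "rpm": 900, "loudness": 0.78},
    {"id": "clip_c", "speed_kmh": 98.0, "rpm": 3200, "loudness": 0.35},
    {"id": "clip_d", "speed_kmh": 40.0, "rpm": 1500, "loudness": 0.60},
]


def sorted_by(clips, feature):
    """Return clip ids ordered from the minimum to the maximum of `feature`."""
    return [c["id"] for c in sorted(clips, key=lambda c: c[feature])]


def neighbours(clips, feature, clip_id, k=1):
    """Return up to k clips on each side of `clip_id` in the sorted order."""
    order = sorted_by(clips, feature)
    idx = order.index(clip_id)
    window = order[max(0, idx - k): idx + k + 1]
    return [c for c in window if c != clip_id]


by_speed = sorted_by(clips, "speed_kmh")
by_loudness = sorted_by(clips, "loudness")
next_to_a = neighbours(clips, "speed_kmh", "clip_a")
print(by_speed, by_loudness, next_to_a)
```

Switching the feature name changes the order entirely, which is roughly what turning a control on the interface could do: the same recordings are traversed along a different axis, for instance from quietest to loudest instead of from slowest to fastest.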

Project Page | François Quévillon

See also Mapping Machine Uncertainty & Rétroviseur and of course the classic “Run Motherfucker Run” (2001/2004) by Marnix de Nijs.