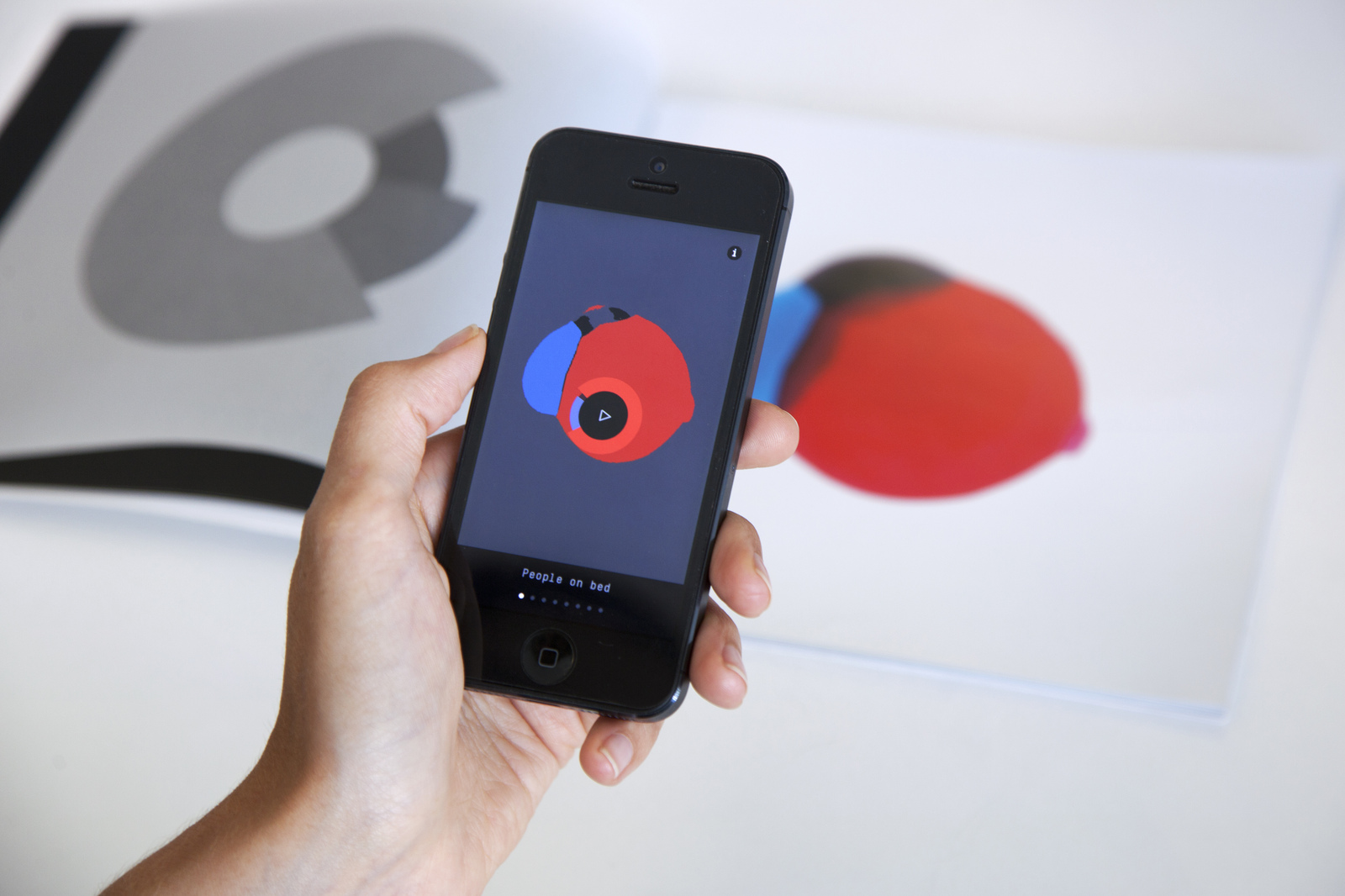

Since the first movie musical in 1927, film audiences have delighted in seeing bodies in motion on the big screen. Some movements are etched into our minds: Liza Minnelli’s cabaret performance with a chair, Gene Kelly swinging joyously in the rain, the iconic lift scene in Dirty Dancing. These historic moments are now accessible to everyone in an interactive artwork.

Using advanced machine learning technology and a camera, this interactive installation tracks the participant’s movements and finds, in real time, matching dance poses among thousands of scenes from famous musicals. When the participant stops moving, the clip of the matching scene starts playing, immersing them in the music and dance of musicals from different eras.

Conventionally, film audiences are passive consumers, sitting in their seats and using their eyes and ears to absorb the experience. This installation combines that traditional experience with tracking technologies, machine learning and matching algorithms to turn it into a performative, physical and personal interaction with the actors on screen. The work crosses the boundaries between digital art, cinema, music, dance and computer science. There is no longer a divide between the stars and the participants, who are able to control their cultural icons through gesture. The boundaries are blurred and the action takes place both on the screen and in front of it.

Technical details

Using state-of-the-art machine learning, we scanned hundreds of scenes to detect the skeletons of actors in famous musicals. Using that output, we built a vast database of poses, each entry recording the exact pose of an actor and the exact point in the musical where it was detected.
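As a rough illustration, one database entry could look like the sketch below. The `PoseRecord` fields, the clip identifier and the normalisation scheme (centre on the mean joint position, scale to unit size) are assumptions made for this example, not a description of the actual database. Normalising makes poses comparable regardless of where the actor stands in the frame or how large they appear.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class PoseRecord:
    film: str                      # hypothetical clip identifier
    time_s: float                  # position in the film, in seconds
    keypoints: Tuple[Point, ...]   # normalised 2D joint positions


def normalise(points: List[Point]) -> Tuple[Point, ...]:
    """Centre the joints on their mean and scale so the farthest joint is at distance 1."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centred = [(x - cx, y - cy) for x, y in points]
    # Fall back to 1.0 if every joint sits on the same spot
    scale = max(hypot(x, y) for x, y in centred) or 1.0
    return tuple((x / scale, y / scale) for x, y in centred)


# Three made-up joints stand in for a full skeleton
record = PoseRecord("example_film", 83.2, normalise([(10, 10), (20, 10), (15, 30)]))
```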

We then use a camera and a skeleton tracker to detect the skeletal position of the participants. Having extracted that, we search our database for the closest match using a pose similarity metric. Once the closest match is returned, the pose metadata is sent via OSC to a custom, ultra-responsive media player written in C++/openFrameworks and the clip starts playing.
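The matching and messaging steps can be sketched as follows. Everything specific here is an assumption for illustration: the similarity metric is plain Euclidean distance over flattened, normalised keypoints, the search is a simple linear scan, and the `/play` address, argument layout, host and port are placeholders. The message is encoded by hand following the OSC 1.0 format (null-terminated strings padded to four bytes, big-endian 32-bit floats) so the example needs only the standard library.

```python
import socket
import struct
from math import sqrt


def pose_distance(a, b):
    """Euclidean distance between two flattened, normalised poses (lower is closer)."""
    return sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def closest_pose(query, database):
    """Linear scan: return the (vector, metadata) entry nearest to the query."""
    return min(database, key=lambda entry: pose_distance(query, entry[0]))


def osc_string(text):
    """OSC strings are ASCII, null-terminated and padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, film, time_s):
    """Encode one OSC message carrying a string and a float32 argument."""
    return (osc_string(address) + osc_string(",sf")
            + osc_string(film) + struct.pack(">f", time_s))


def send(packet, host, port):
    """Fire-and-forget UDP send to the media player."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Made-up four-number "poses" with (film, time in seconds) metadata
database = [
    ((0.0, 1.0, 0.5, -0.5), ("film_a", 12.5)),
    ((1.0, 0.0, -0.5, 0.5), ("film_b", 98.0)),
]
_, (film, time_s) = closest_pose((0.9, 0.1, -0.4, 0.4), database)
packet = osc_message("/play", film, time_s)
```

Calling `send(packet, "127.0.0.1", 9000)` would then deliver the message to a player listening on that (placeholder) port.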

To optimise the search for the closest pose match, we implemented a tree structure that returns the closest match in a few milliseconds, making the whole installation very responsive to participants’ movements.
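The text does not say which tree structure is used. As one possible illustration, the sketch below uses a k-d tree, a common choice for nearest-neighbour search: it splits the points on the median along a cycling axis and skips any branch that cannot contain a closer point than the best one found so far. The names `build` and `nearest` are hypothetical.

```python
from math import dist  # Python 3.8+


class Node:
    __slots__ = ("point", "payload", "axis", "left", "right")

    def __init__(self, point, payload, axis, left, right):
        self.point, self.payload, self.axis = point, payload, axis
        self.left, self.right = left, right


def build(items, depth=0):
    """Build a k-d tree from (point, payload) pairs, splitting on the median."""
    if not items:
        return None
    axis = depth % len(items[0][0])
    items = sorted(items, key=lambda item: item[0][axis])
    mid = len(items) // 2
    point, payload = items[mid]
    return Node(point, payload, axis,
                build(items[:mid], depth + 1),
                build(items[mid + 1:], depth + 1))


def nearest(node, query, best=None):
    """Return (distance, point, payload) of the stored point closest to query."""
    if node is None:
        return best
    d = dist(query, node.point)
    if best is None or d < best[0]:
        best = (d, node.point, node.payload)
    diff = query[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, query, best)
    # Only search the far side if the splitting plane is closer than the best match
    if abs(diff) < best[0]:
        best = nearest(far, query, best)
    return best


tree = build([((0.0, 0.0), "pose_a"), ((1.0, 1.0), "pose_b"), ((0.2, 0.9), "pose_c")])
distance, point, payload = nearest(tree, (0.1, 0.8))  # payload is "pose_c"
```

The pruning in k-d trees becomes less effective as the number of dimensions grows, so for full skeletons with many joints a different structure, such as a ball tree, may be a better fit.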

Tech used:

- Python

- MediaPipe skeleton tracking library

- custom media player in C++/openFrameworks

- OSC messaging

- webcam

- laptop

- speakers

- large format projector

This project was designed and developed by Random Quark, a creative technology studio.

www.randomquark.com
