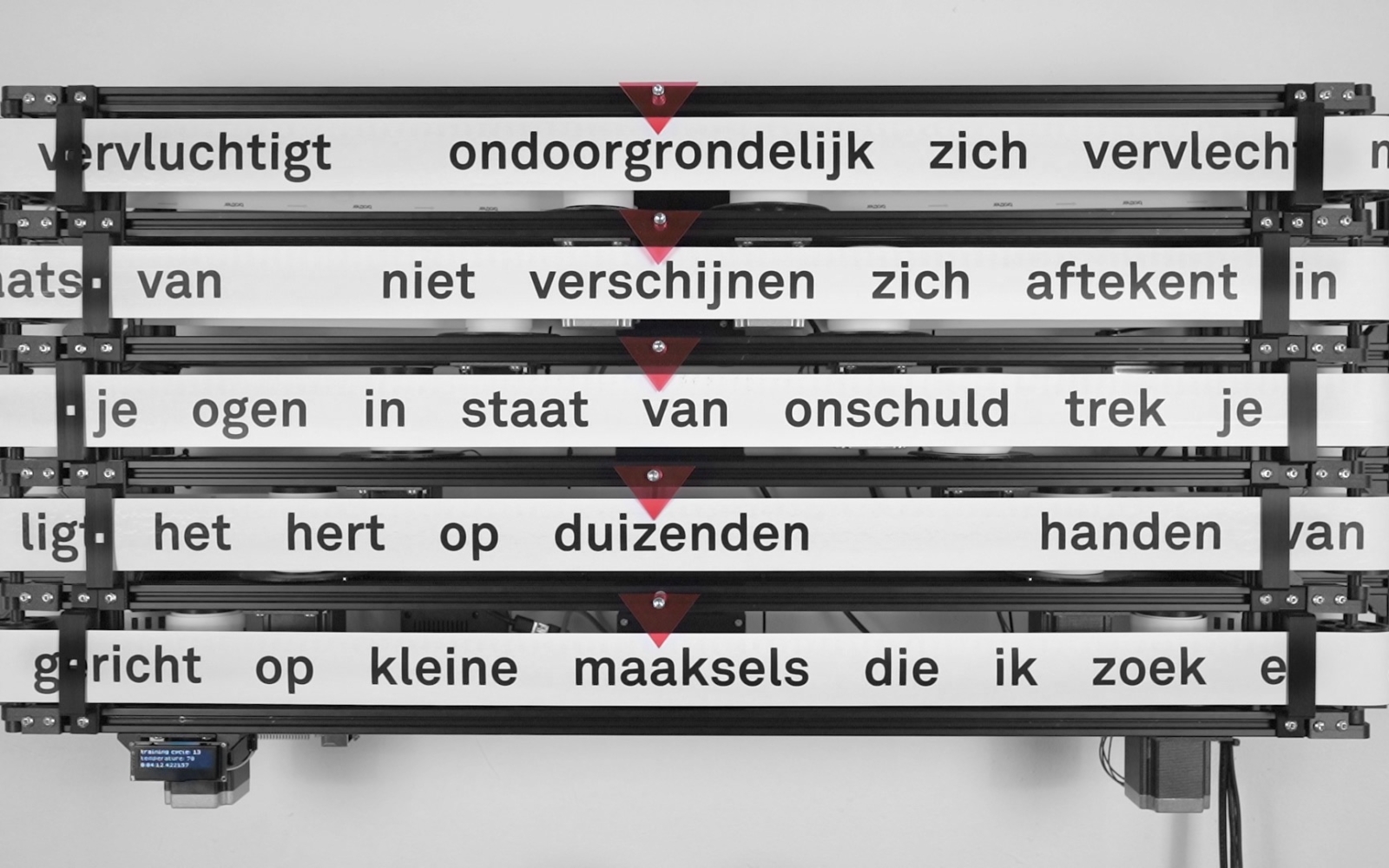

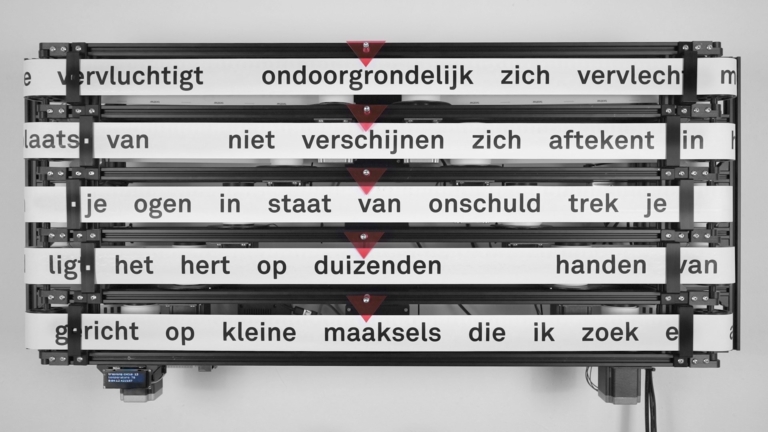

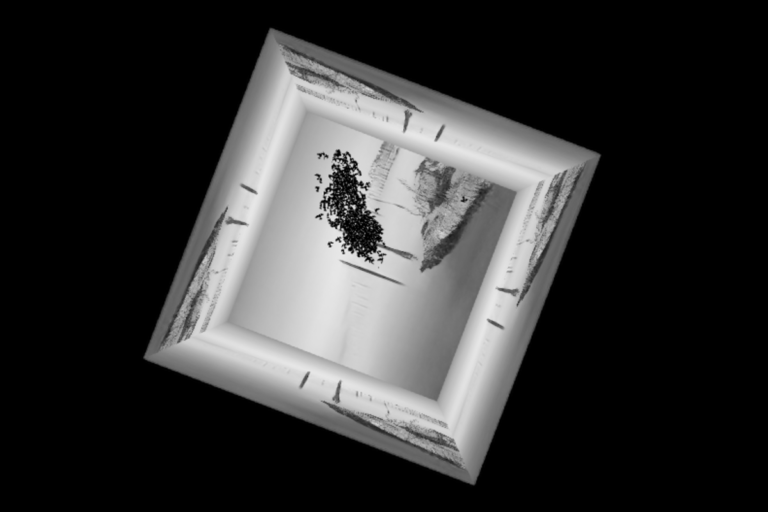

The Case for a Small Language Model is a speculative AI installation inspired by the work of Dutch composer and poet Rozalie Hirs. The installation shows the entire book printed on five 30-meter-long strips of label printer paper that scroll in both directions. As the five lines move back and forth, a vertical reading allows new combinations to emerge.

dnose is an interactive sculpture in the shape of a nose, created at NYU Tisch School of the Arts’ ITP (Interactive Telecommunications Program), that combines image recognition and machine learning on a Raspberry Pi to predict the smell of any object placed underneath it.

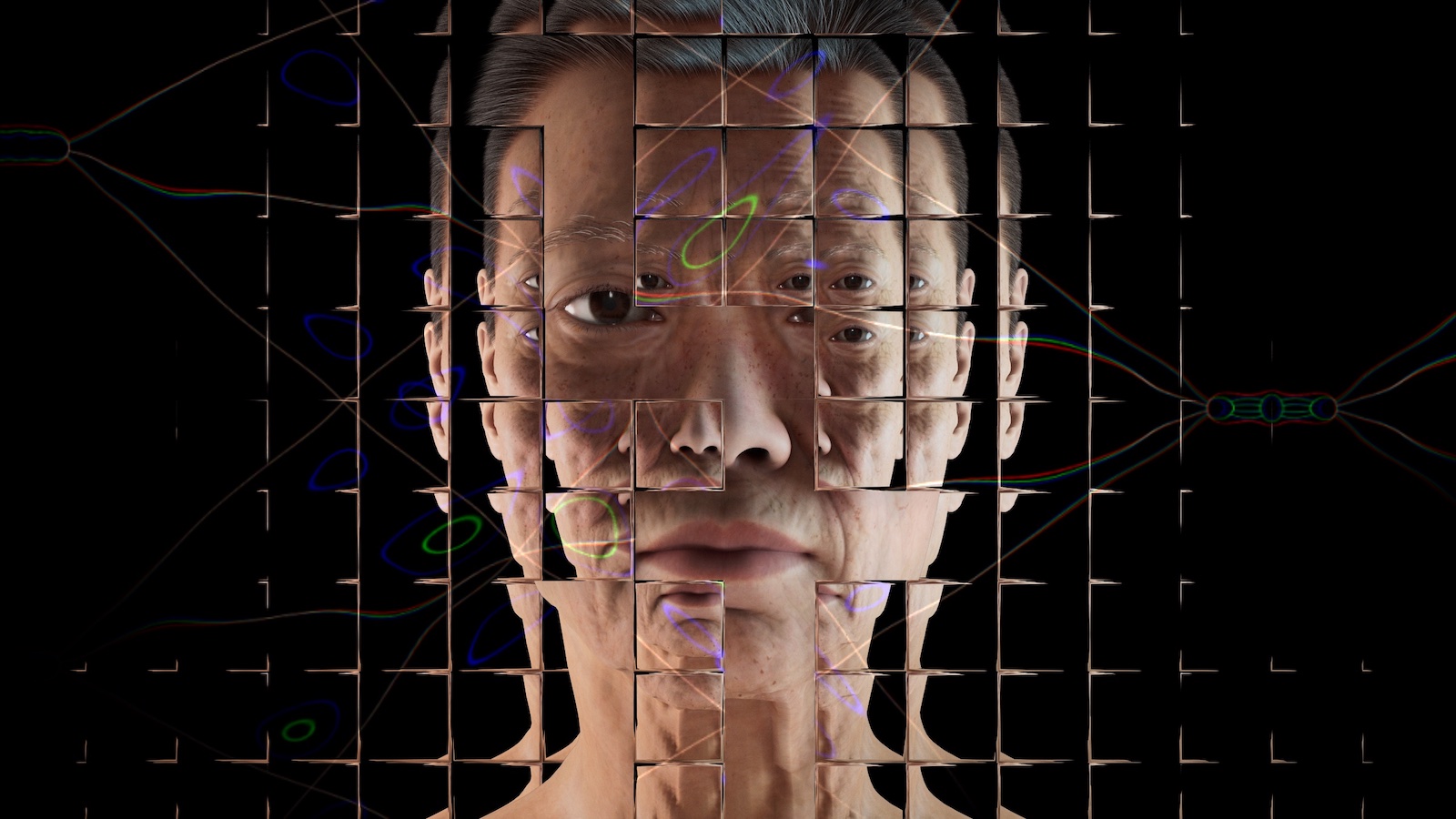

Latent Imaging and Imagining is part of an autoethnographic artistic research study that explores the concept of chrononormativity from the inverted perspective of nonconformity, and asks how to negotiate a careful and queer mode of accessing childhood memories.

Elisava has partnered with the creative research lab IAM to launch a new Master in Design for Responsible Artificial Intelligence, a part-time, low-residency programme that brings together designers, strategists, trend researchers, futurists, new media artists, cultural producers, journalists and creative technologists to tackle the questions that responsible AI design raises.

Created by Lauren Lee McCarthy & Kyle McDonald, Unlearning Language is an interactive installation and performance that uses machine learning to provoke participants into finding new ways of using language that are undetectable to algorithms.

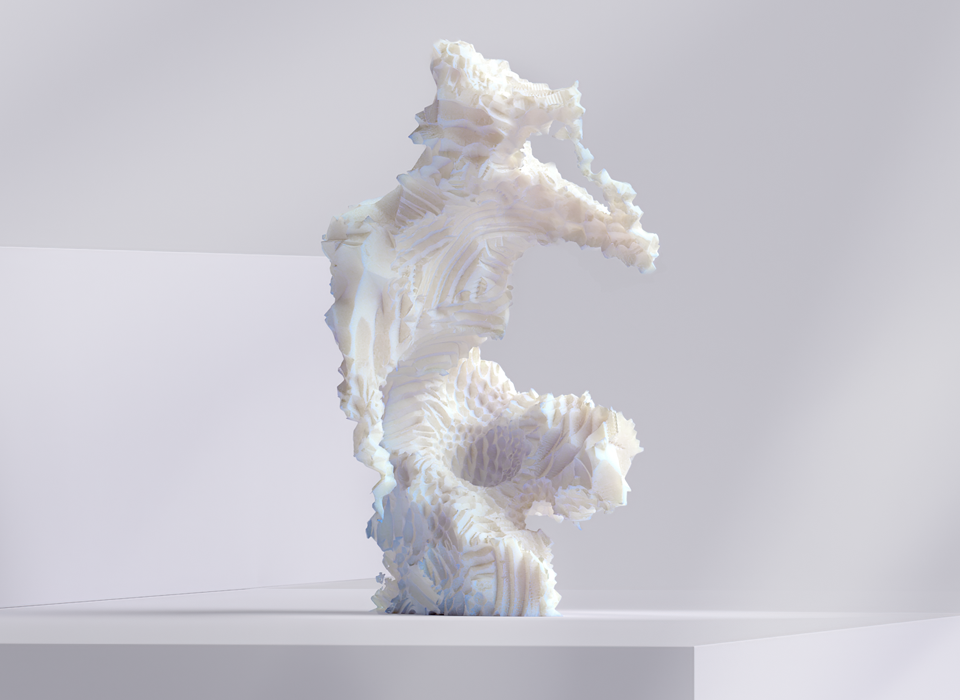

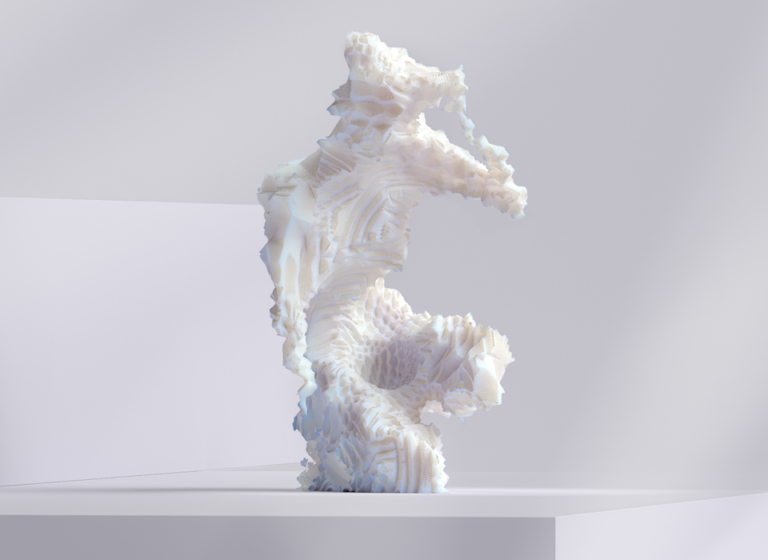

Imagined as a tool to assist a conventional approach to sculpting, this AI model is developed to seek out strategies that continually improve how a given form is achieved. By feeding it different tools, rules and rewards through reinforcement learning, the team steer the process, revealing unpredictable outcomes.

Since the first movie musical back in 1927, film audiences have delighted in seeing bodies in motion on the big screen. Some movements are etched into our minds: Liza Minnelli’s cabaret performance with a chair, Gene Kelly swinging joyously in the rain, the iconic lift scene in Dirty Dancing. These historic moments are now accessible…

Created by Benedikt Groß, Maik Groß and Thibault Durand, Mind the “Uuh” is an experimental training device that helps anyone become a better public speaker. The device constantly listens to your voice, aiming to make you aware of “uuh” filler words.

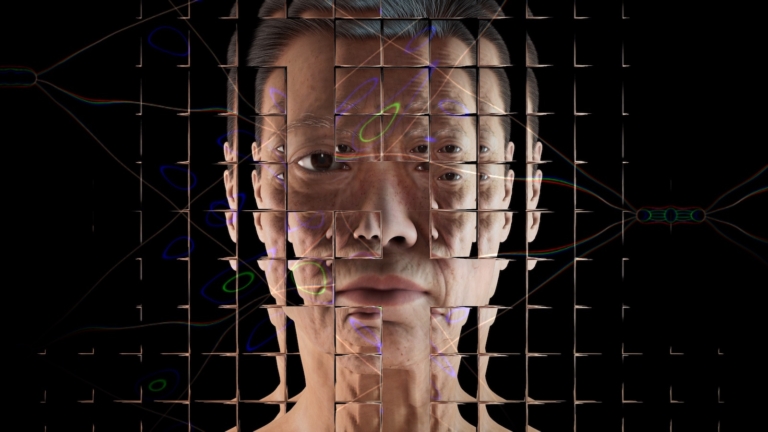

Created by Richard Vijgen, ‘Through Artificial Eyes’ is an interactive installation that lets the audience look at 558 episodes of VPRO Tegenlicht (a Dutch future affairs documentary series) through the eyes of a computer vision neural network.

‘Floating Codes’ is a site-specific light and sound installation that explores the inner workings and hidden aesthetics of artificial neural networks, the building blocks of many machine learning and artificial intelligence systems. The exhibition space itself becomes a neural network that processes information from its constantly changing environment (light conditions, the day-night cycle), including the presence of visitors.

The Lost Passage is an interactive web experience that creates a new digital home for an extinct species, the passenger pigeon. It is a digitally crafted world in which a swarm of artificial pigeons seems to inhabit a sublime yet destitute memory of a lost landscape.

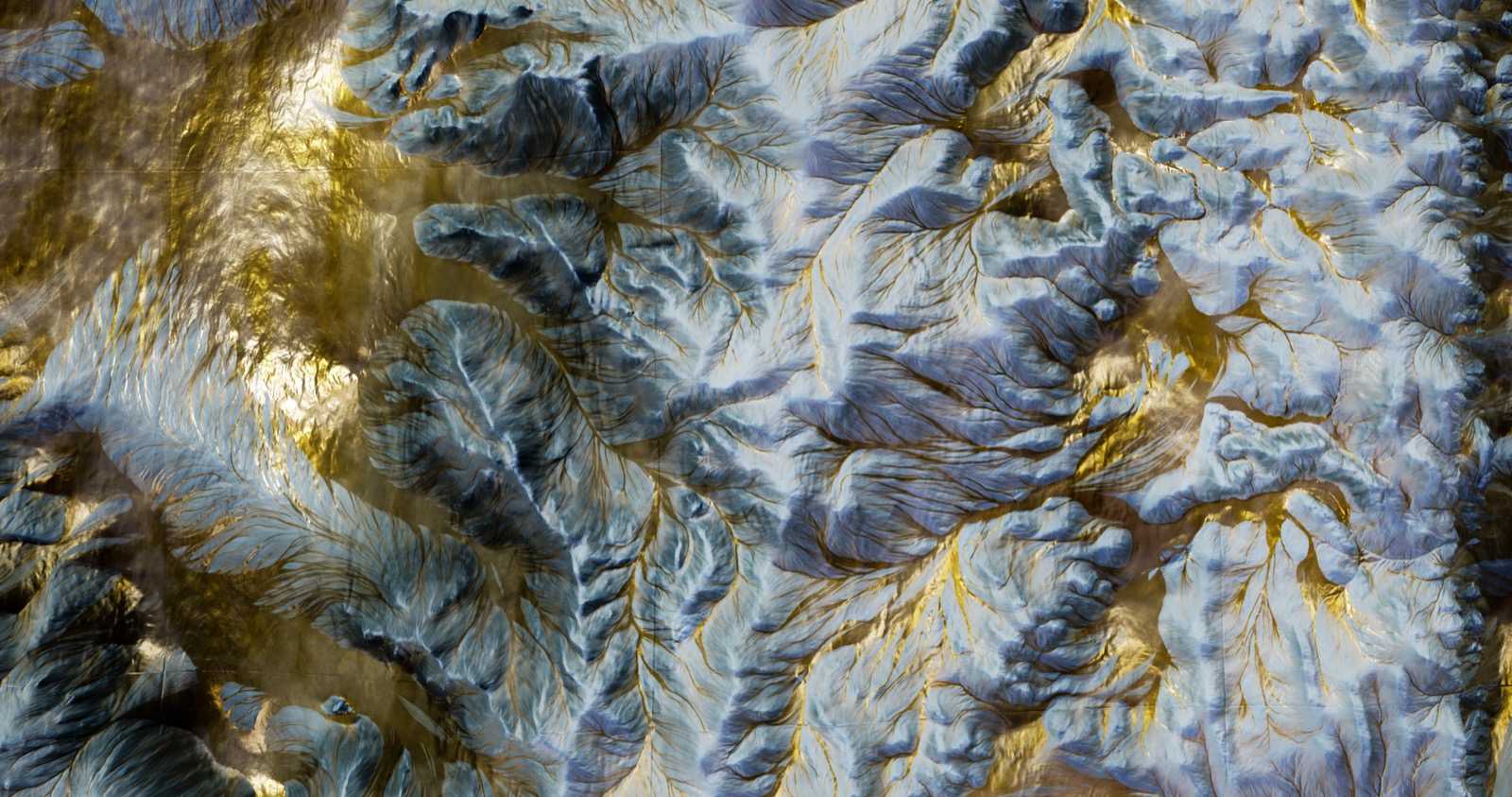

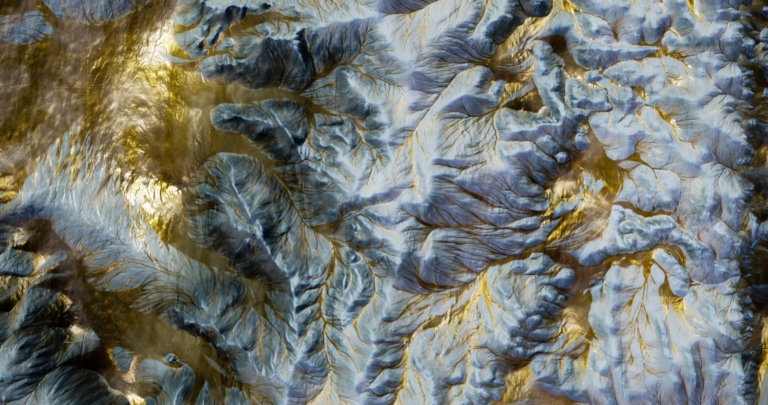

Created by Franz Rosati, ‘Latentscape’ depicts an exploration of virtual landscapes and territories, accompanied by music generated with machine learning tools trained on traditional, folk and pop music without temporal or cultural limits.

Created by Douglas Edric Stanley, Inside Inside is an interactive installation that remixes video games and cinema. Between the two, a neural network draws associations from its artificial understanding of each, generating a film in real time from gameplay using images from the history of cinema.

Created by Christian Mio Loclair (Waltz Binaire), ‘Blackberry Winter’ is an investigation into the possibilities of identifying motion as a continuous walk through a latent space of situations.

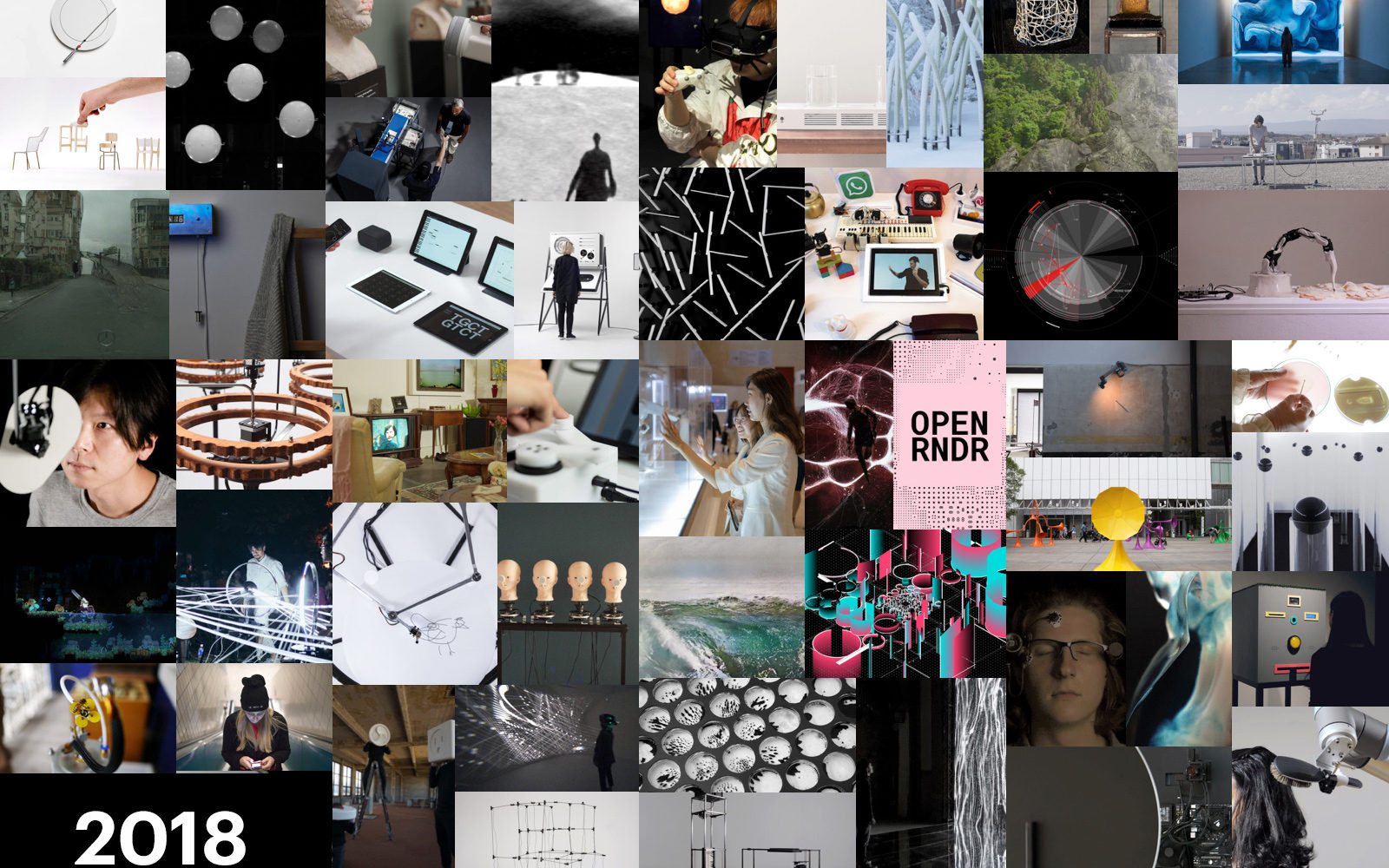

As 2018 comes to a close, we take a moment to look back at the outstanding work done this year. From spectacular machines, intricate tools and mesmerising performances and installations to new mediums for artistic enquiry, so many great new projects have been added to the CAN archive! With your help, we selected some favourites.

Uncanny Rd. is a drawing tool that allows users to interactively synthesise street images with the help of Generative Adversarial Networks (GANs). The project was created as a collaboration between Anastasis Germanidis and Cristobal Valenzuela to explore the new kinds of human-machine collaboration that deep learning can enable.

The chAIr Project is a series of four chairs created using a generative adversarial network (GAN) trained on a dataset of iconic 20th-century chairs, with the goal of “generating a classic”. The results are semi-abstract visual prompts, which a human designer used as a starting point for actual chair design concepts.

Created by Waltz Binaire, Narciss is a robot that uses artificial intelligence to analyse itself, thus reflecting on its own existence. Built from Google’s TensorFlow framework and a simple mirror, the experiment translates self-portraits of a digital body into lyrical guesses.

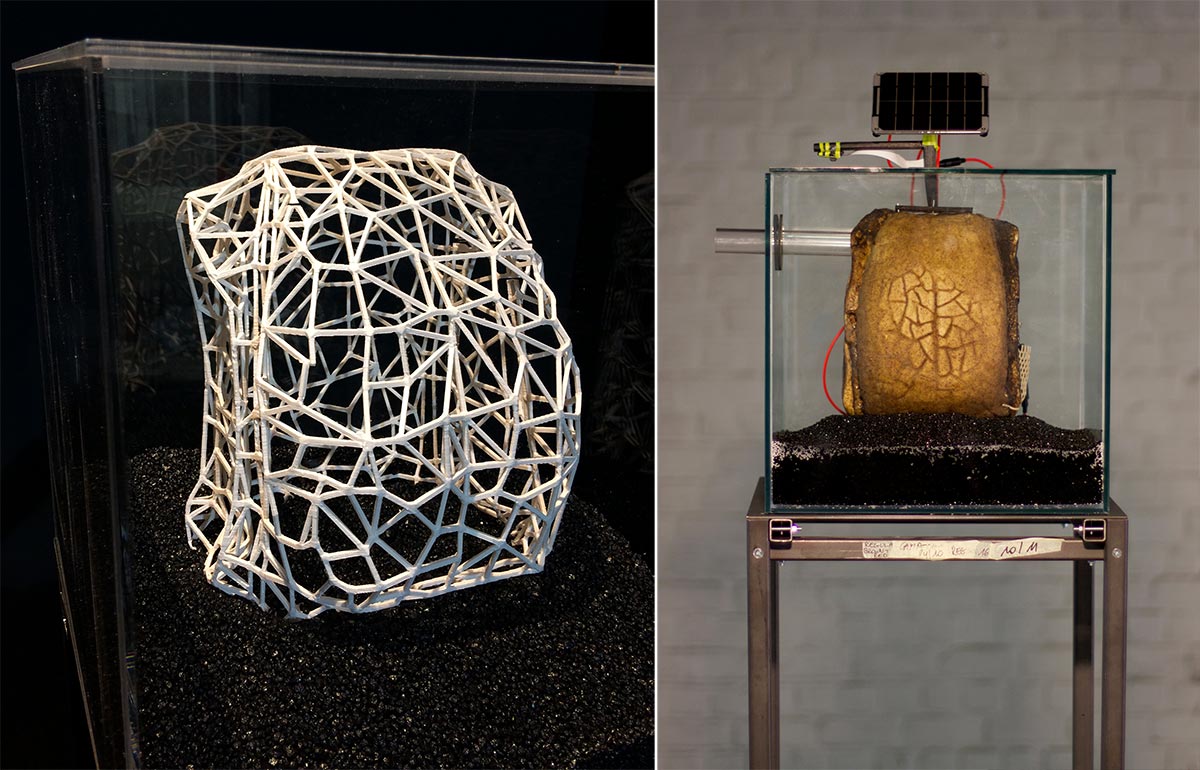

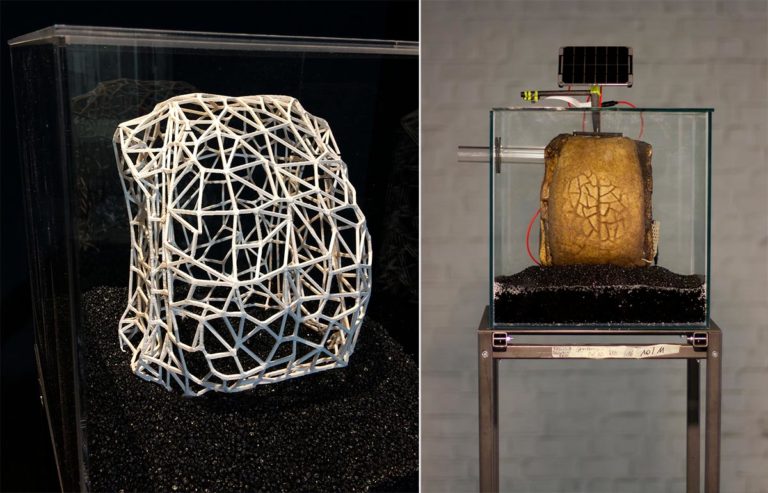

Created by AnneMarie Maes, Genesis of a Microbial Skin is a mixed-media installation and research project exploring the idea of Intelligent Beehives, with a focus on smart materials, in particular microbial skin. The project is predominantly about growing Intelligent Guerilla Beehives from scratch with living materials, just as nature does.

Artificial Imagination was a symposium, organized by Ottawa’s Artengine this past winter, that invited a group of artists to discuss the state of AI in arts and culture. CAN was on hand to take in the proceedings, and now that documentation has emerged, we share videos and a brief report.

The latest in Memo Akten’s series of experiments and explorations into neural networks is a pre-trained deep neural network that makes predictions on live camera input, trying to make sense of what it sees in the context of what it has seen before.

Created by Tore Knudsen, ‘Pour Reception’ is a playful radio that uses machine learning and tangible computing to challenge our cultural understanding of what an interface is and can be. Two glasses of water are turned into a digital material for the user to explore and appropriate.

Created by Selcuk Artut, Variable is an artwork that explores the signification of terms in artists’ statements. It uses machine learning algorithms to problematise the limitations of algorithms and to encourage the visitor to reflect on poststructuralism’s ontological questions.