Created by Aria Xiying Bao and Yubo Zhao at MIT’s School of Architecture and Planning, Narratron is an interactive projector that augments hand shadow puppetry with AI-generated storytelling. Designed for all ages, it transforms traditional shadow play into an immersive, phygital (physical plus digital) storytelling experience.

Hand shadow puppetry is one of the oldest forms of storytelling and is practiced across cultures. To enrich that experience with multimodal artificial intelligence, Narratron responds to the user’s hand shadows with AI-generated audio and visuals of the story those shadows are telling.

Aria Xiying Bao and Yubo Zhao

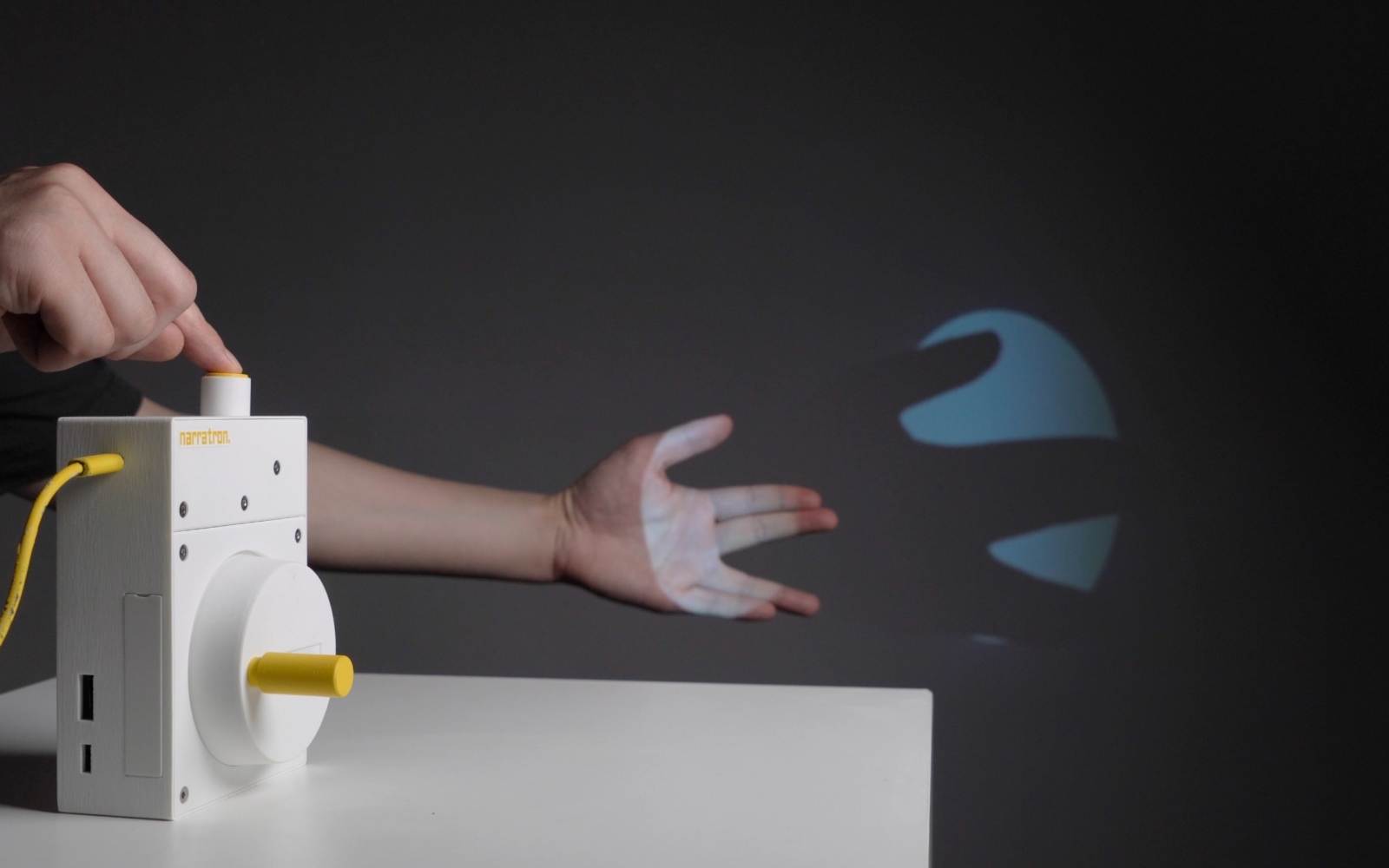

Users pose animated hand forms in front of the on-device camera, which captures them as shadow puppets. Embedded algorithms built on multiple AI models then recognize the captured image as the story’s main characters, write the story, generate its visual settings, and produce the narrator’s speech. Users are encouraged to develop the story and change the plot at any point simply by posing a different shadow as a new character; at that moment, the human-AI interaction moves beyond the responder-respondee dichotomy and becomes a tangible form of co-creation.
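One way to model the co-creation loop described above is to keep the story’s cast as state and treat each newly recognized shadow as a new character. The sketch below is a minimal illustration under that assumption; the `StoryState` class and its method are hypothetical and not taken from Narratron’s code.

```python
from dataclasses import dataclass, field


@dataclass
class StoryState:
    """Hypothetical story state: the cast grows as new shadows are recognized."""
    cast: list[str] = field(default_factory=list)

    def add_character(self, keyword: str) -> bool:
        """Add a recognized animal keyword to the cast.

        Returns True if the keyword introduced a new character (a plot change),
        False if that character is already part of the story.
        """
        if keyword in self.cast:
            return False
        self.cast.append(keyword)
        return True


state = StoryState()
state.add_character("rabbit")  # first character enters the story
state.add_character("rabbit")  # same shadow again: nothing changes
state.add_character("bird")    # a different shadow: a new character joins
print(state.cast)  # ['rabbit', 'bird']
```

Returning a boolean lets the caller decide whether a plot change should trigger new story text.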

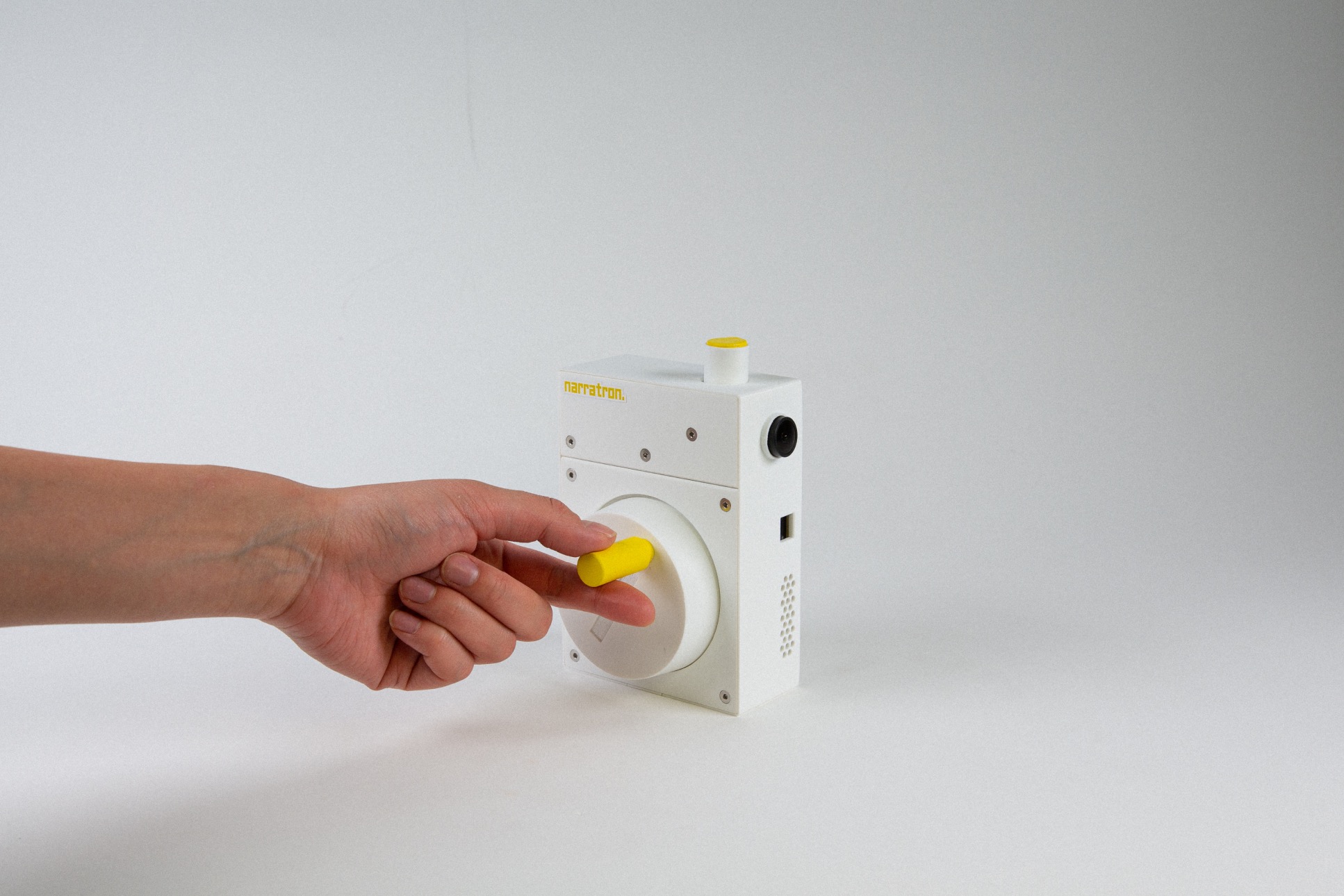

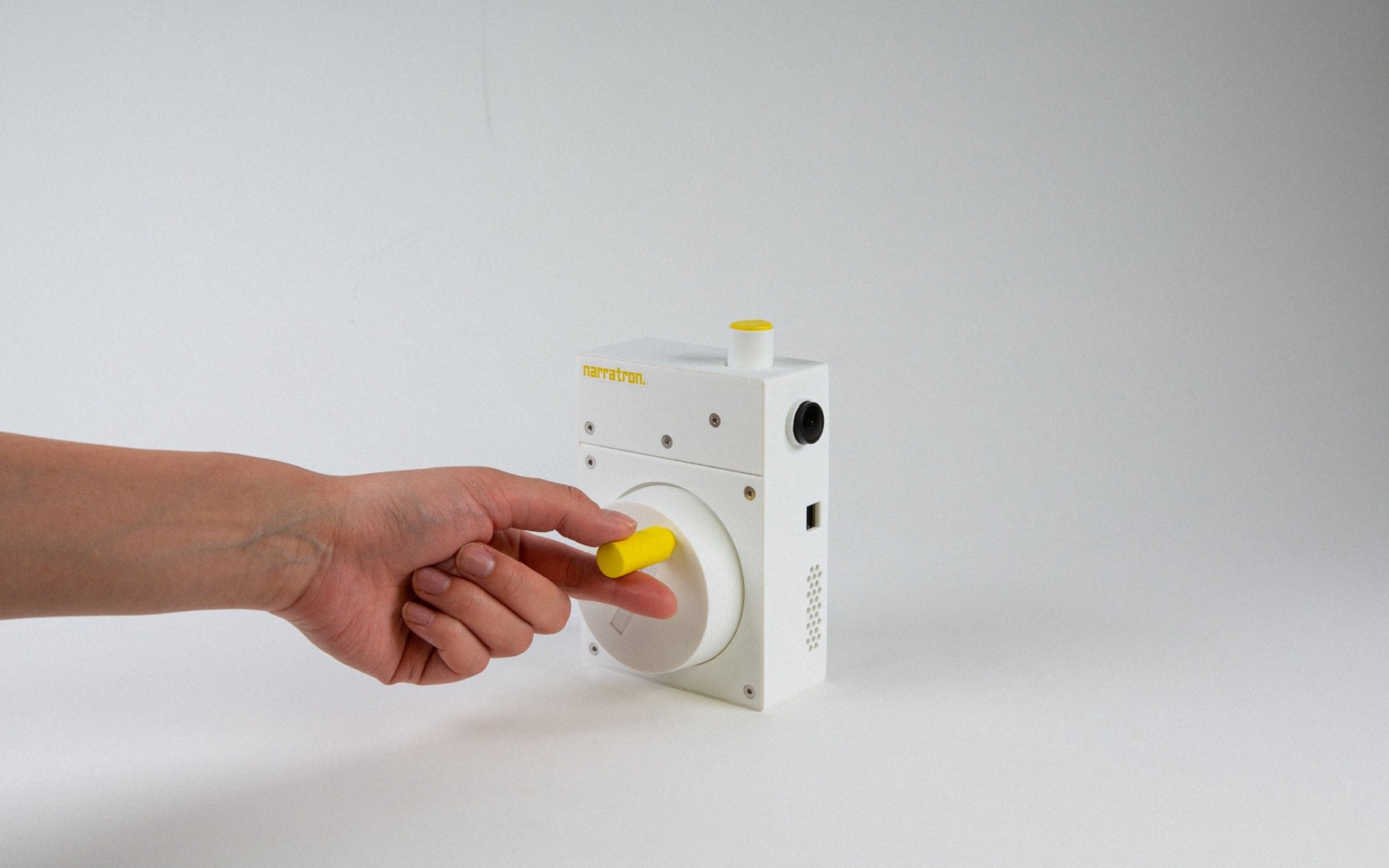

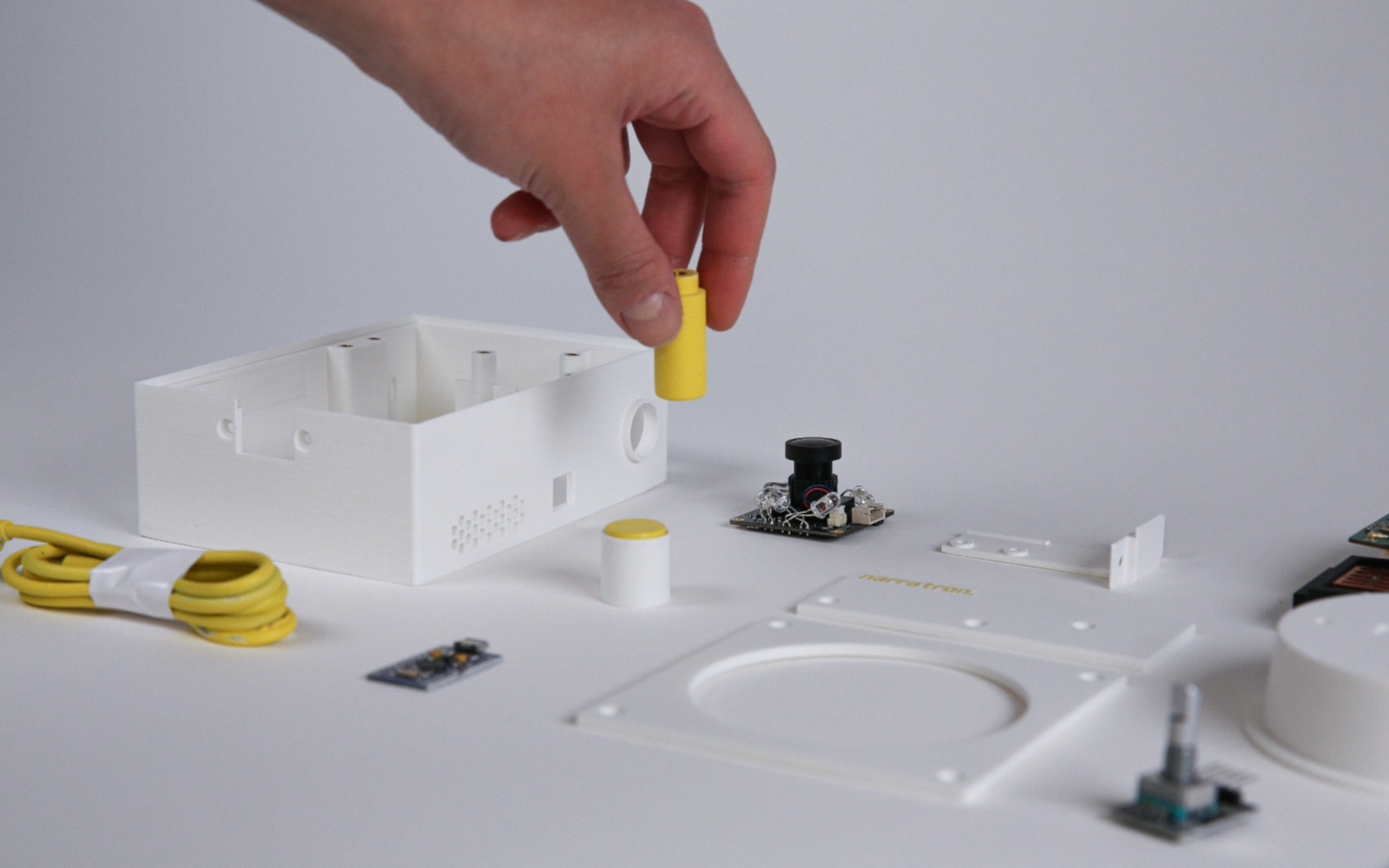

Inspired by the physical affordances of hand cranks and shutters, the original interfaces of movie projectors and cameras, Narratron’s form factor takes a minimalist design approach intended to create a frictionless user experience. The projector unit, speaker, microcontroller, and all other electronic components are packed into the confined interior of this standalone product, whose body is made from commercial-grade SLS-printed nylon. The edges are ergonomically bevelled in a functionalist, understated aesthetic, and the center of mass is positioned to balance stability and portability, so Narratron works both as a desktop device and as a handheld gadget.

The history of hand shadow play is nearly untraceable; it was widely practiced long before the Greek shadow show Karagiozis or Chinese shadow puppetry (Pi Ying Xi) existed. It is a prelinguistic and transcultural form of storytelling that entertains and educates the younger generation, and it also stimulates creative production as we mimic the things we see and tell the stories we relate to. In that sense, Narratron embeds AI deeply into this intelligent collective effort of hands, eyes, and brains as a true “fairytale copilot”. Its multimodality, which combines visual, auditory, tactile, and textual input and output through the collaboration of an LLM, an image classifier, a speech synthesizer, and diffusion models, demonstrates how seamlessly we can interact bodily with AI. By bridging the digital and the physical, we connect the ancient with the future.


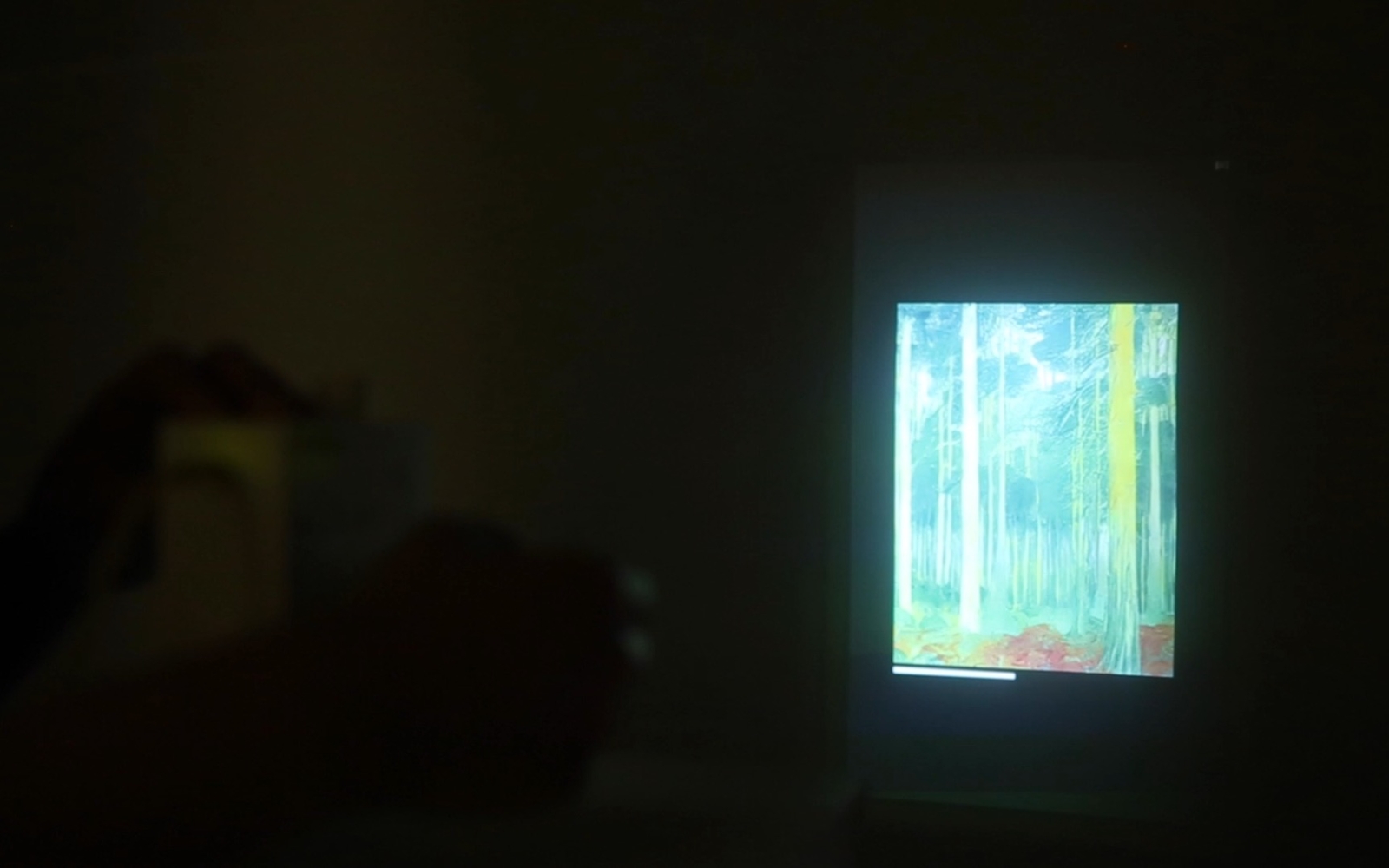

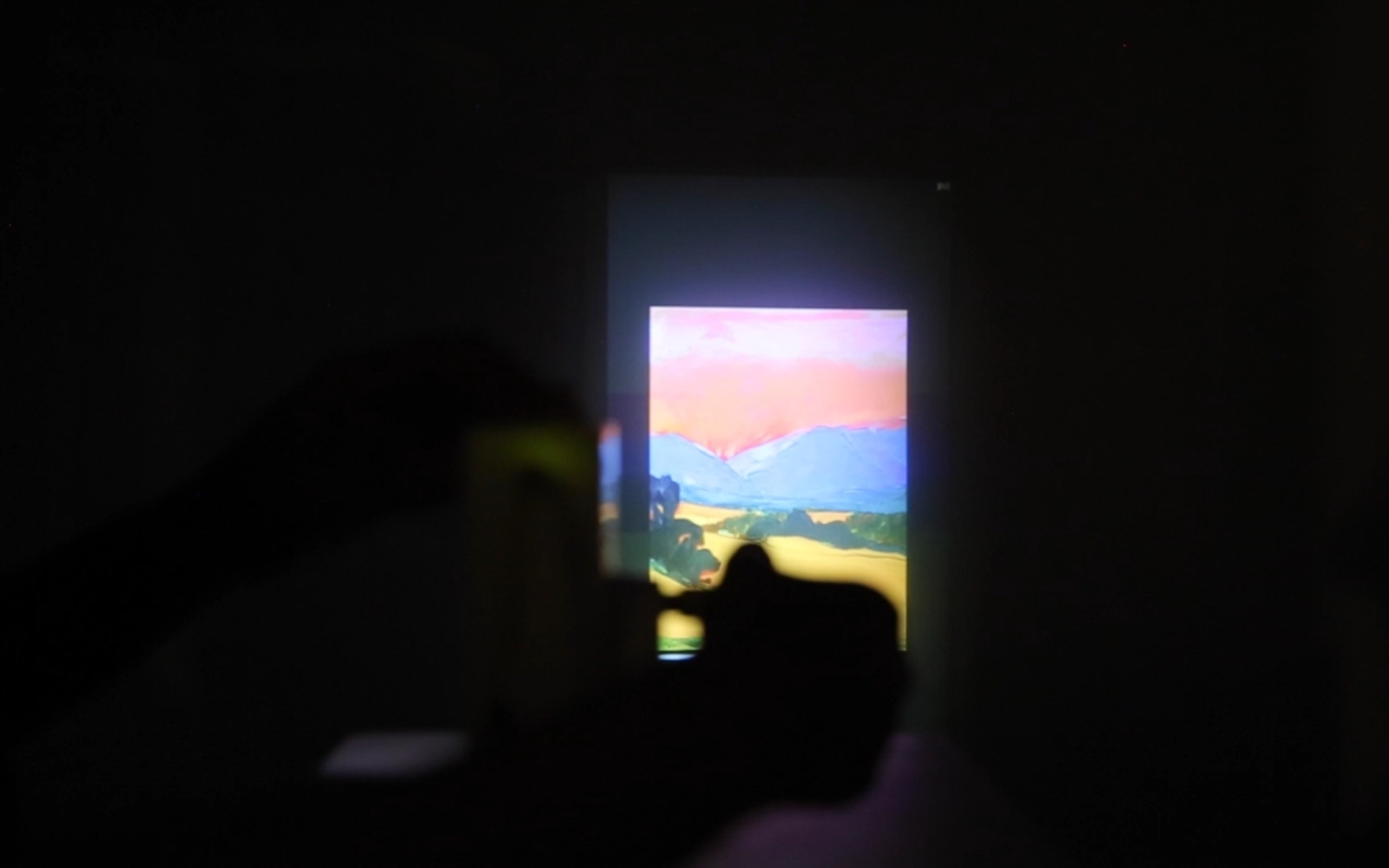

Narratron is designed to offer users a captivating, immersive experience that merges the art of hand shadow puppetry with cutting-edge AI. The experience begins with a startup screen that sets a serene, focused ambiance; when the user turns Narratron on, they are greeted by instructions that prepare them for what lies ahead. Once the startup screen fades away, the user is free to play with their hand shadows in any way they wish, experimenting with different shapes, sizes, and movements while Narratron’s camera captures the intricate shadow shapes. Trained image classifiers integrated into Narratron then analyze the captured shapes and translate them into animal keywords, which serve as the foundation for the next step: generating a complete story.
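Because the camera sees a continuous stream of frames, per-frame predictions can flicker while the hands are still moving into a pose. Narratron’s exact handling of this is not described; a common technique, shown here purely as an assumption, is to accept a keyword only when it wins a majority of the most recent frames. `KeywordSmoother`, the window size, and the vote threshold are hypothetical.

```python
from collections import Counter, deque
from typing import Optional


class KeywordSmoother:
    """Hypothetical smoother: majority vote over the last `window` frame labels."""

    def __init__(self, window: int = 5, min_votes: int = 3) -> None:
        self.recent: deque = deque(maxlen=window)  # oldest labels fall off automatically
        self.min_votes = min_votes

    def update(self, frame_label: str) -> Optional[str]:
        """Record one per-frame label; return a stable keyword, or None if undecided."""
        self.recent.append(frame_label)
        label, votes = Counter(self.recent).most_common(1)[0]
        return label if votes >= self.min_votes else None


smoother = KeywordSmoother(window=5, min_votes=3)
for label in ["dog", "rabbit", "rabbit", "dog", "rabbit"]:
    result = smoother.update(label)
print(result)  # rabbit
```

A larger window makes the keyword steadier but slower to react when the user switches to a new shadow.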

To generate the story, Narratron uses the GPT-3.5 language model. GPT-3.5 takes the animal keyword identified from the user’s hand shadow and generates a story that combines plotlines, dialogue, and descriptive elements. While the story is being generated, Narratron simultaneously uses Stable Diffusion to generate an image of the animal associated with the user’s hand shadow. This image is projected onto the surface, adding a visual component to the audio experience and strengthening the user’s connection to the narrative.
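Generating the image while the story is being written amounts to running two network-bound requests concurrently. A minimal sketch, assuming a thread pool is used for this, is shown below; `generate_story` and `generate_image` are hypothetical stand-ins for the GPT-3.5 and Stable Diffusion calls, not real client code.

```python
from concurrent.futures import ThreadPoolExecutor


def generate_story(keyword: str) -> str:
    """Hypothetical stand-in for the GPT-3.5 request."""
    return f"Once upon a time, a little {keyword} set out on an adventure."


def generate_image(keyword: str) -> str:
    """Hypothetical stand-in for the Stable Diffusion request; returns an image path."""
    return f"{keyword}.png"


def generate_chapter(keyword: str) -> tuple[str, str]:
    """Start story and image generation at the same time and wait for both."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        story_future = pool.submit(generate_story, keyword)
        image_future = pool.submit(generate_image, keyword)
        return story_future.result(), image_future.result()


story, image = generate_chapter("rabbit")
print(image)  # rabbit.png
```

Threads suit this case because both calls spend most of their time waiting on remote servers rather than using the CPU.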

To start the storytelling and advance to the next chapter, the user spins Narratron’s knob. Reminiscent of the hand cranks on vintage movie projectors, the knob is intended to add a sense of nostalgia and tactile engagement. Each rotation of the knob advances the story, unlocking a new chapter and revealing fresh elements of the narrative. This active participation gives the user a sense of agency, letting them dictate the pacing and flow of the storytelling.
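The knob-to-chapter mapping can be sketched as a counter over rotary-encoder ticks. The sensor is not specified, so this sketch assumes a hypothetical encoder that reports 20 ticks per full rotation, and it advances one chapter per complete forward turn. The `ChapterKnob` class is illustrative only.

```python
class ChapterKnob:
    """Hypothetical knob handler: one full forward rotation unlocks one chapter."""

    def __init__(self, ticks_per_rotation: int = 20) -> None:
        self.ticks_per_rotation = ticks_per_rotation
        self.ticks = 0    # forward ticks accumulated toward the next chapter
        self.chapter = 0  # 0 means the story has not started yet

    def on_ticks(self, delta: int) -> bool:
        """Handle encoder movement; return True if at least one chapter was unlocked."""
        # Ignore backward turns so the story only moves forward.
        self.ticks += max(delta, 0)
        advanced = False
        while self.ticks >= self.ticks_per_rotation:
            self.ticks -= self.ticks_per_rotation
            self.chapter += 1
            advanced = True
        return advanced


knob = ChapterKnob(ticks_per_rotation=20)
knob.on_ticks(12)  # partial turn: nothing happens yet
knob.on_ticks(10)  # completes the first rotation and starts the story
print(knob.chapter)  # 1
```

Carrying the leftover ticks forward means a slow, uneven spin still counts as exactly one rotation.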

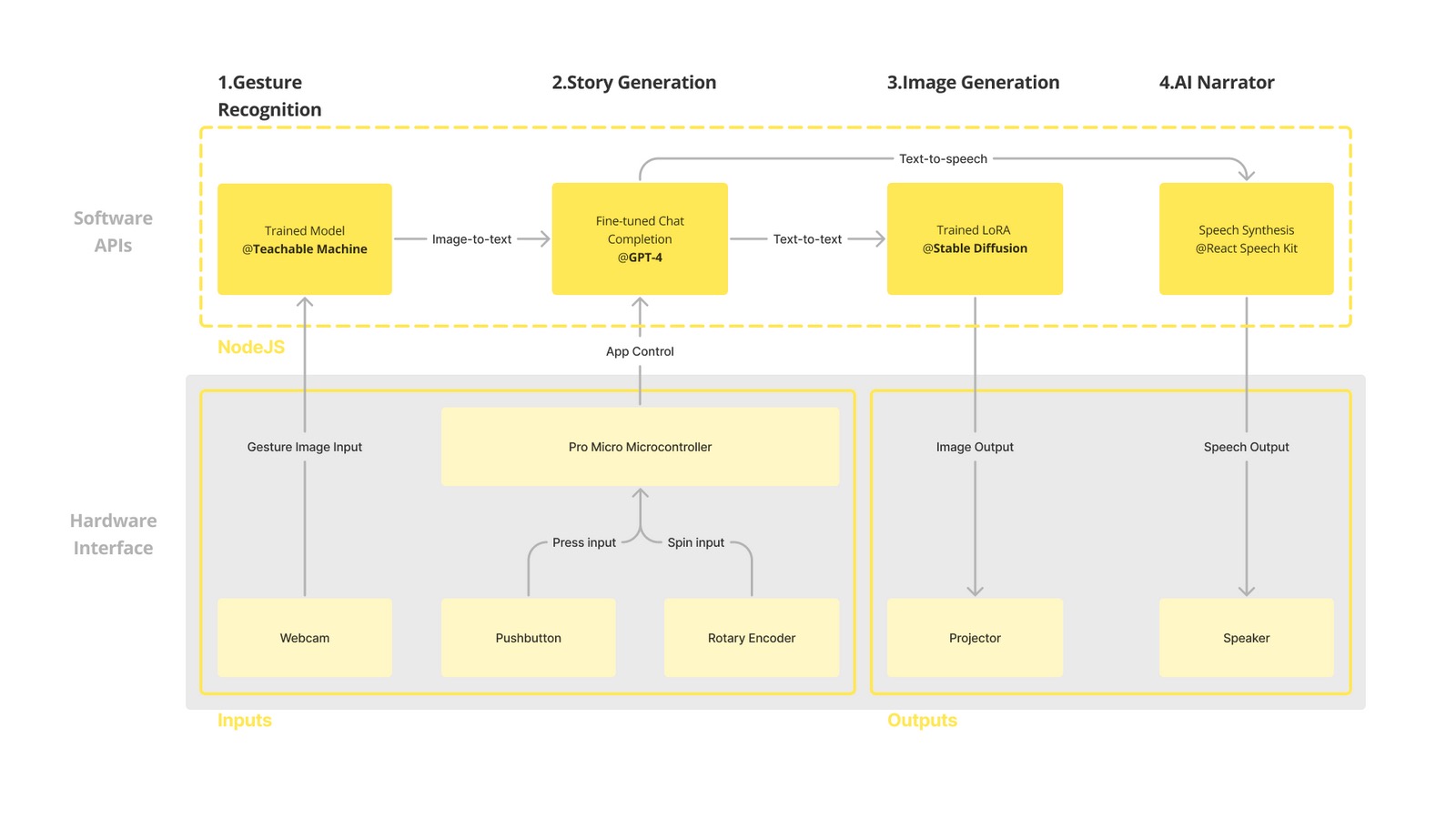

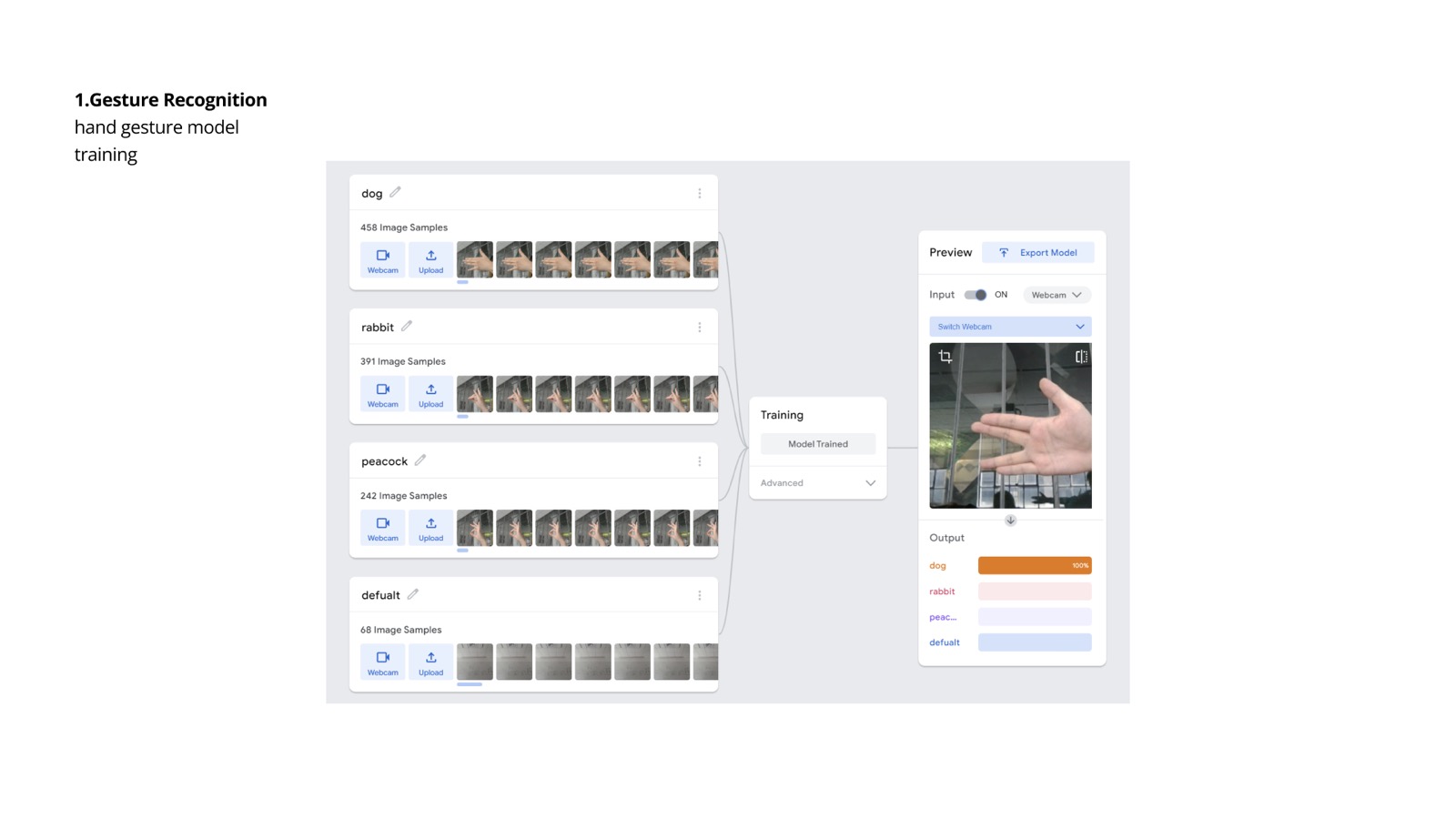

Hand Gesture Recognition

- Teachable Machine: For hand gesture recognition, Narratron uses image classifiers trained with Teachable Machine, an online platform for training custom machine learning models. The model is trained through Teachable Machine’s interface on captured examples of various hand shadow shapes, so that it can recognize and interpret the user’s gestures accurately.
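Turning the classifier’s output into an animal keyword comes down to picking the most probable class. The sketch below assumes a Teachable Machine Keras-style export, whose `labels.txt` lists one `index name` pair per line, and a model that returns one probability per class. The class names and the 0.7 confidence threshold are hypothetical; the model inference call itself is omitted.

```python
from typing import Optional


def parse_labels(text: str) -> list[str]:
    """Parse lines like '0 rabbit' into ['rabbit', ...], ordered by index."""
    labels: dict[int, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        index, name = line.split(" ", 1)
        labels[int(index)] = name
    return [labels[i] for i in sorted(labels)]


def top_keyword(probabilities: list[float], labels: list[str],
                threshold: float = 0.7) -> Optional[str]:
    """Return the most likely label, or None if the model is not confident enough."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best] if probabilities[best] >= threshold else None


labels = parse_labels("0 rabbit\n1 dog\n2 bird\n")
print(top_keyword([0.05, 0.85, 0.10], labels))  # dog
print(top_keyword([0.40, 0.35, 0.25], labels))  # None
```

Returning `None` for low-confidence frames keeps ambiguous, half-formed shadows from introducing unintended characters.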

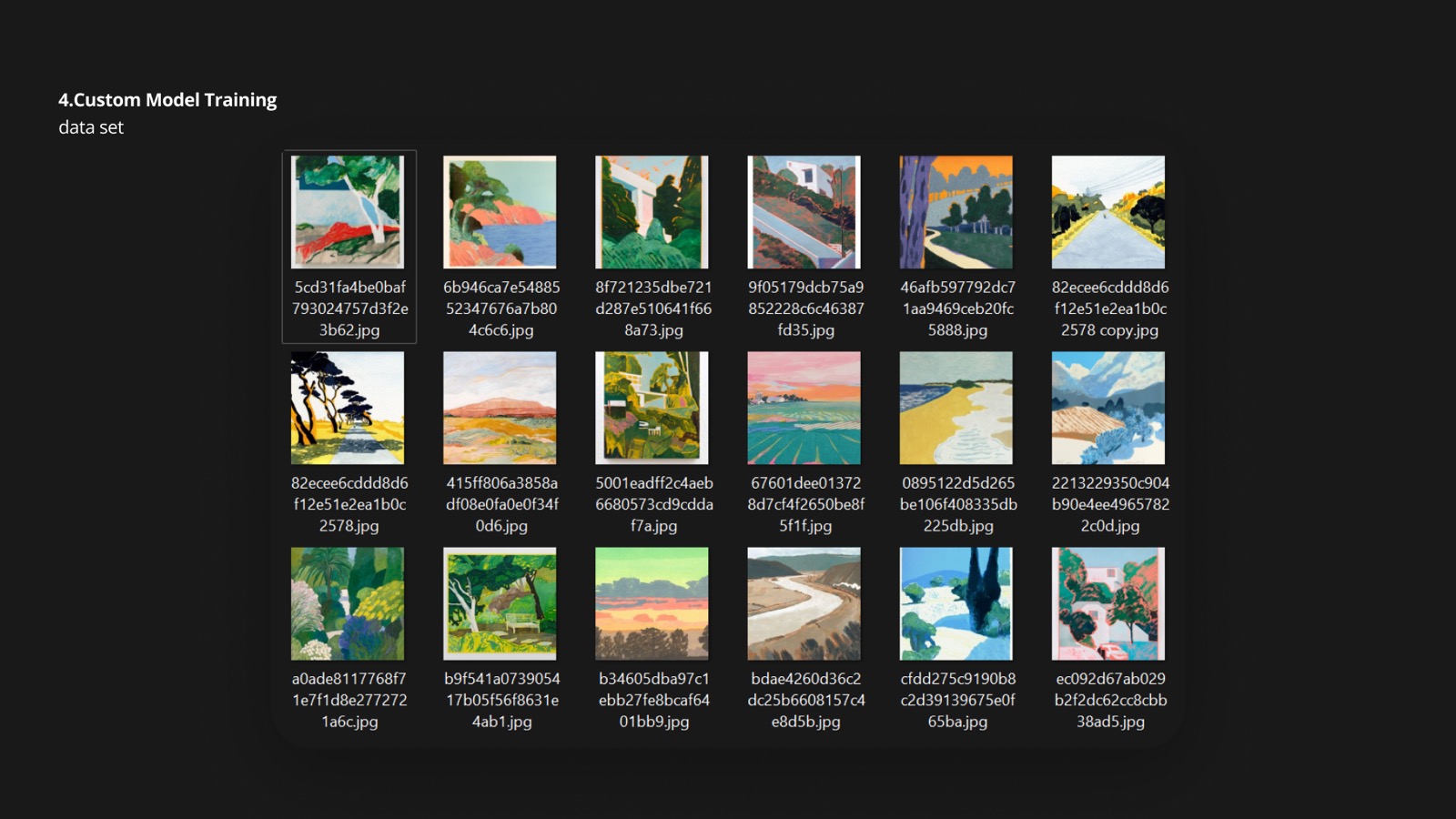

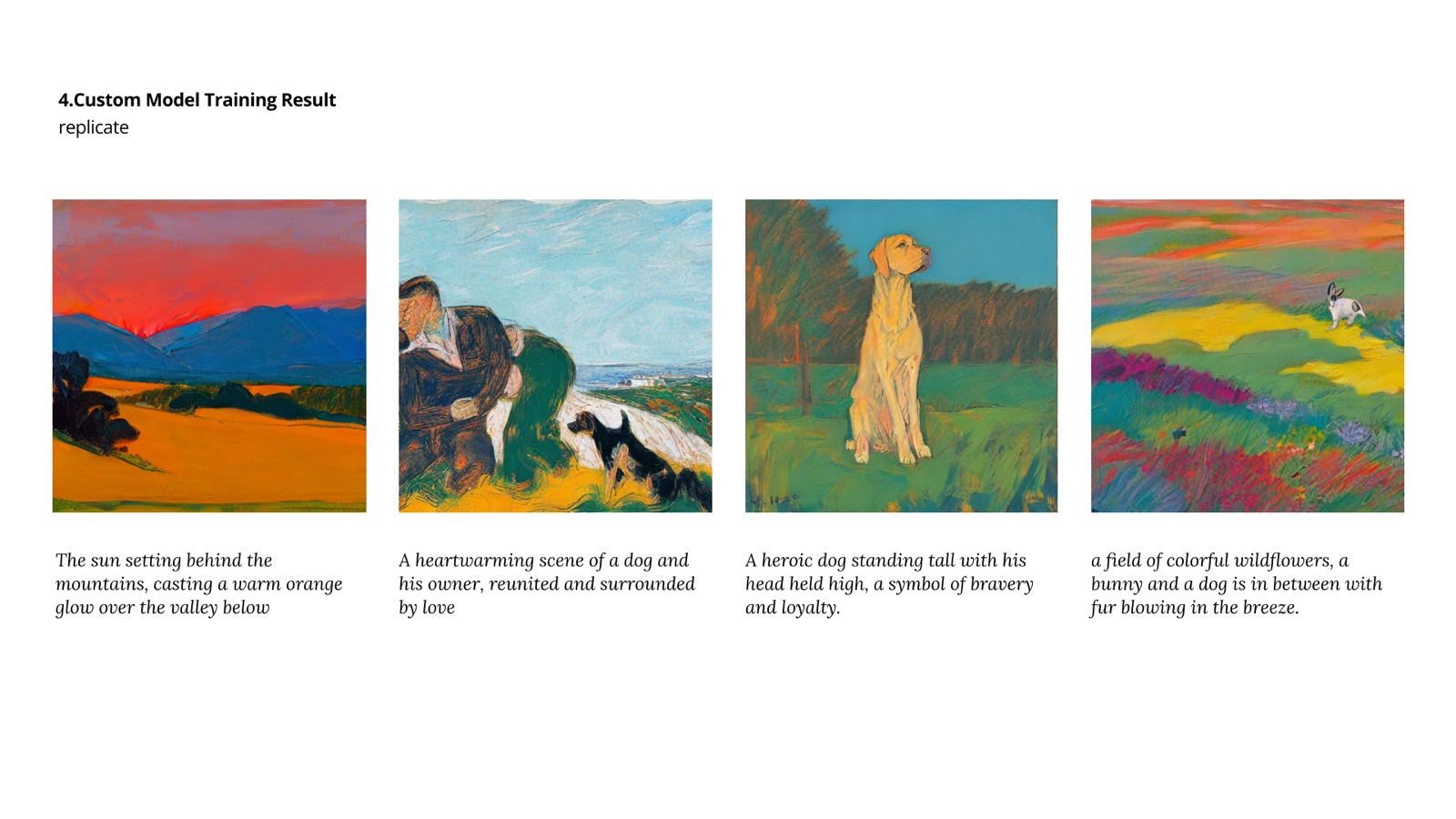

Custom Model Training

- Replicate: To train the image generation model, Narratron uses Replicate, a platform for training and running custom deep learning models. We first collected a dataset of images in a childlike painting style featuring bright and vibrant colors, simple shapes, and whimsical details, and then trained our model on Replicate with that dataset. The trained Stable Diffusion model generates images from prompts that are themselves derived from the generated storyline.
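Styles learned by fine-tuning Stable Diffusion are commonly invoked through a trigger token placed in the prompt (as in DreamBooth-style training). Whether Narratron’s model works this way is an assumption. `STYLE_TOKEN` is a hypothetical placeholder, and `build_image_prompt` shows one plausible way to turn a chapter of the storyline into a prompt that carries the dataset’s style cues.

```python
STYLE_TOKEN = "TOK"  # hypothetical trigger token learned during fine-tuning
STYLE_CUES = "childlike painting, bright and vibrant colors, simple shapes, whimsical details"


def build_image_prompt(chapter_text: str, max_words: int = 40) -> str:
    """Condense a chapter into an image prompt that carries the trained style."""
    # Keep the prompt short: Stable Diffusion's CLIP text encoder reads at most 77 tokens.
    scene = " ".join(chapter_text.split()[:max_words]).rstrip(".!?")
    return f"{scene}, in the style of {STYLE_TOKEN}, {STYLE_CUES}"


print(build_image_prompt("A shy rabbit meets a bird."))
```

Appending the style cues after the scene description keeps the story content first, while the trigger token and descriptors steer the look.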

Story Generation

- GPT-3.5-turbo: Narratron uses OpenAI’s GPT-3.5-turbo model to generate dynamic and engaging stories. The model is trained on a vast corpus of text, including literary works, articles, and other narrative sources. For Narratron specifically, the story of The Ugly Duckling may have been used as training data, providing a foundation for generating narratives from user inputs and animal keywords.
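GPT-3.5-turbo is accessed through OpenAI’s Chat Completions API, which takes a list of role-tagged messages. The sketch below only builds the request body. The system prompt wording and the per-chapter request structure are illustrative assumptions, not Narratron’s actual prompt.

```python
import json


def build_story_request(cast: list[str], chapter: int) -> dict:
    """Build a Chat Completions request body for the next story chapter."""
    system = (
        "You are a narrator of children's fairy tales. "
        "Write one short chapter with plot, dialogue, and vivid description."
    )
    user = f"Characters: {', '.join(cast)}. Write chapter {chapter} of the story."
    return {
        "model": "gpt-3.5-turbo",
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
    }


body = build_story_request(["rabbit", "bird"], chapter=2)
print(json.dumps(body, indent=2))
```

With the official `openai` Python package (v1 or later), a body like this can be sent as `client.chat.completions.create(**body)`.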

Backend

- Data Processing and Integration: The backend of Narratron handles data processing and integration tasks. It receives and processes user input from the hand gesture recognition model and passes it to the story generation and image generation components. It also manages the integration between the different components of the system to deliver a seamless and synchronized user experience.

Image Generation

- Stable Diffusion: For image generation, Narratron uses Stable Diffusion, a latent diffusion model that produces visually appealing, contextually relevant images from text prompts. The model has been trained on a custom dataset created specifically for Narratron, which likely includes various animal images and visual elements related to storytelling.

Summary

- Narratron’s tech stack combines a variety of powerful technologies and frameworks. Teachable Machine is used for hand gesture recognition, GPT-3.5-turbo for story generation, Stable Diffusion for image generation, and Replicate for custom model training. This comprehensive tech stack enables Narratron to deliver an interactive storytelling experience that blends user creativity with AI-generated narratives and visuals.

Project Page | Aria Xiying Bao | Yubo Zhao

Created for the Interaction Intelligence on Large Language Objects (LLO) class led by Marcelo Coelho, part of MIT’s Art & Design Major (School of Architecture and Planning).